A Look At Distortion Mechanisms

This paper is continued from the introduction to Understanding Distortion: A Look At Electronics.

Harmonic Distortion

Harmonic Distortion is a non-linear behavior that occurs not only within the world of electronics but in acoustic phenomena as well. It is characterized by the appearance of frequency components at multiples of the input signal frequencies that were not present in the original input waveform. Harmonic distortion is commonly abbreviated as H.D. A harmonic is an additional signal whose frequency is a whole-number (integer) multiple of the frequency of a fundamental (input) reference signal.

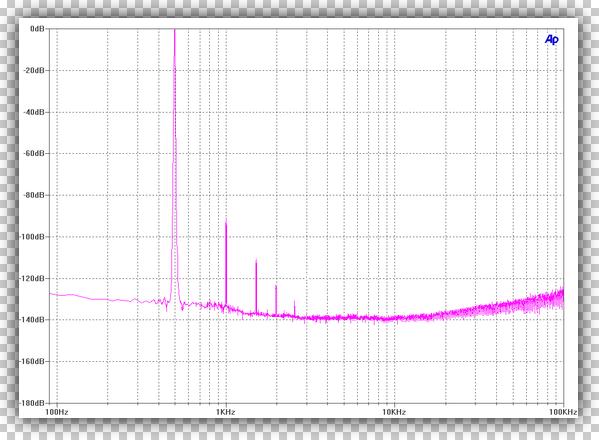

Harmonics can be even- or odd-ordered, and they often extend over a wide bandwidth above the fundamental. For a signal whose fundamental frequency is f1, the second harmonic has a frequency twice as high, i.e. two times f1. A third harmonic has a frequency three times that of f1, and so on. This can be demonstrated with a signal of 1000 cycles per second (1kHz): its second and third harmonics are 2kHz and 3kHz. If the signal is complex and composed of many fundamentals, each of them can produce its own harmonics. Higher even-order harmonics include the 4th, 6th, and 8th, and higher odd-order harmonics include the 5th, 7th, and 9th.
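The arithmetic above can be sketched in a few lines. This is only an illustration; the function names are hypothetical and the 110 Hz second fundamental is an arbitrary example of a complex signal.

```python
def harmonic_series(fundamental_hz, highest_order):
    """Frequencies of harmonics 1..highest_order, where order 1 is the fundamental f1."""
    return [n * fundamental_hz for n in range(1, highest_order + 1)]


def even_and_odd_orders(fundamental_hz, highest_order):
    """Split harmonics 2..highest_order into even-order and odd-order lists."""
    series = harmonic_series(fundamental_hz, highest_order)[1:]  # drop the fundamental
    even = [f for n, f in enumerate(series, start=2) if n % 2 == 0]
    odd = [f for n, f in enumerate(series, start=2) if n % 2 == 1]
    return even, odd


print(harmonic_series(1000, 3))      # [1000, 2000, 3000]
print(even_and_odd_orders(1000, 9))  # ([2000, 4000, 6000, 8000], [3000, 5000, 7000, 9000])

# A complex signal with several fundamentals produces a harmonic series for each one.
for f1 in (110, 1000):
    print(f1, harmonic_series(f1, 5))
```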

As the harmonic spectrum becomes richer, the waveform takes on a more complex appearance, indicating greater deviation from the original. A dense, high-level harmonic spectrum may completely obscure the intended input signal. When that input is sinusoidal, the harmonics can distort it until the waveform is unrecognizable. For this reason, it is important to control, if not minimize, the relative intensity of such distortion.

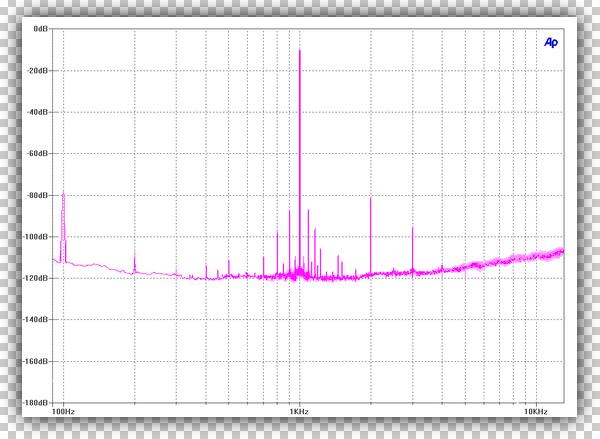

In the consumer electronics industry, it is rare to see Harmonic Distortion presented in a manner that identifies each harmonic individually. The standardized measure for distortion in electronics is Total Harmonic Distortion, a single numerical figure based on steady-state sinusoidal test signals in accordance with IHF standards. Often abbreviated as T.H.D, it is the root-sum-square total of all relevant harmonics, expressed as a percentage of the fundamental (some definitions use the total signal magnitude instead) or logarithmically in decibels. While the harmonic spectrum holds great importance during design, T.H.D provides a quick reference to the overall level of harmonic distortion. The T.H.D rating of a power amplifier, or other device, refers to the unwanted harmonics the device creates during its intended and most linear mode of operation.
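As a minimal sketch, assuming the common definition of T.H.D as the root-sum-square of the harmonic levels divided by the fundamental, the figure can be computed from individual harmonic readings. The measurement values below are hypothetical.

```python
import math


def thd_percent(fundamental_rms, harmonic_rms):
    """THD as the root-sum-square of harmonic levels relative to the fundamental, in percent."""
    return 100.0 * math.sqrt(sum(h * h for h in harmonic_rms)) / fundamental_rms


def percent_to_db(thd_pct):
    """Express a THD percentage as a level in dB relative to the fundamental."""
    return 20.0 * math.log10(thd_pct / 100.0)


# Hypothetical measurement: 1 V fundamental, 2nd harmonic at 3 mV, 3rd harmonic at 4 mV.
thd = thd_percent(1.0, [0.003, 0.004])
print(f"{thd:.2f} %")                  # 0.50 %
print(f"{percent_to_db(thd):.1f} dB")  # -46.0 dB
```

Note that the harmonics add as powers, not as plain magnitudes: 3 mV and 4 mV combine to 5 mV, not 7 mV.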

Second- and third-order harmonic distortion are the most common types naturally occurring in stages of signal amplification, and they are also typically the highest in magnitude. This holds true for both solid-state and hollow-state technologies, and they are among the most easily modeled forms of non-linear behavior. The supporting circuitry within a stage of amplification can be tailored to reduce, enhance, or modify the successive orders of harmonic distortion, with second-order harmonics being the easiest to reduce to vanishingly low levels.

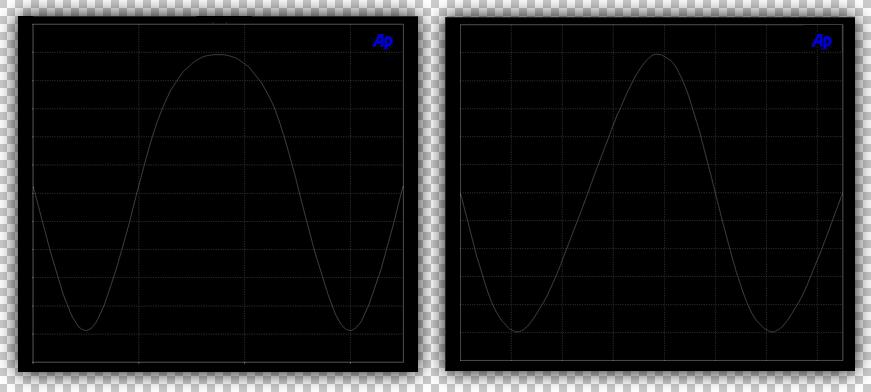

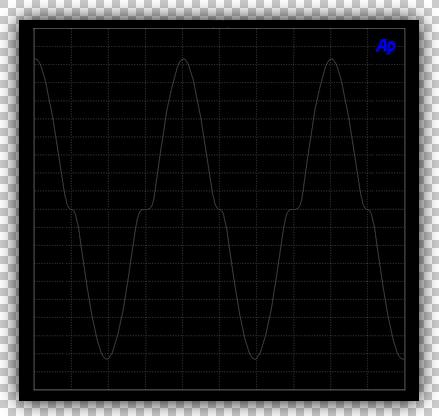

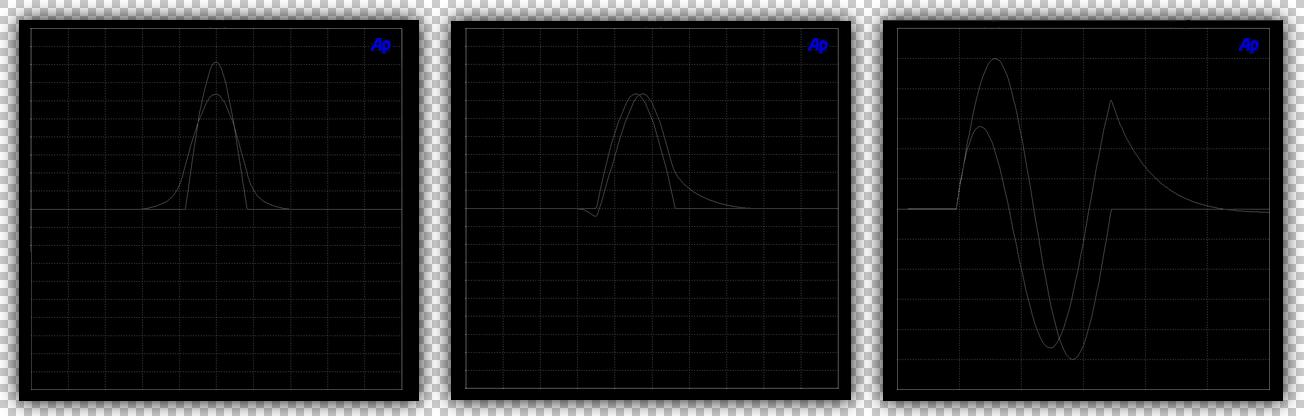

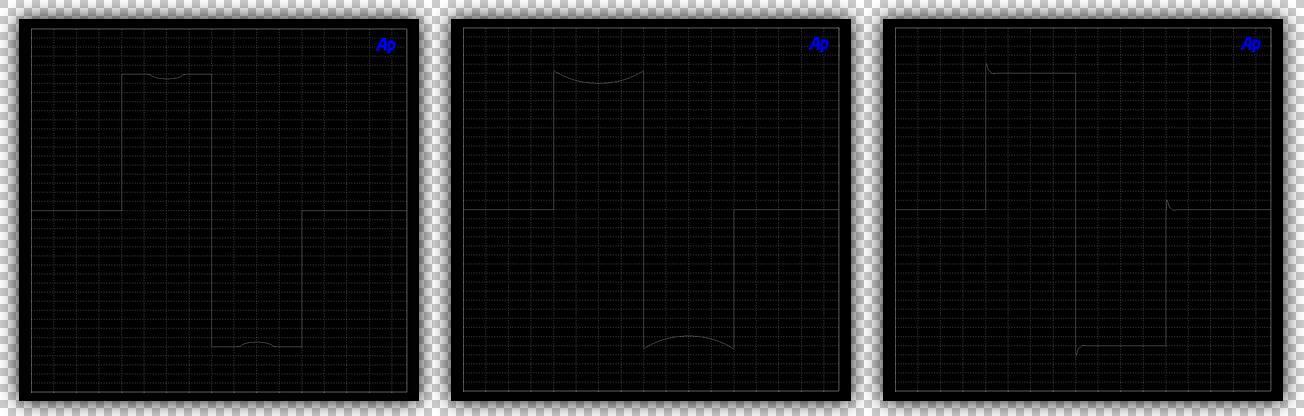

Depending on its phase, a second-order harmonic can have quite different effects on the original signal. When it is in phase, it can tilt the waveform, sharpening the 0-90 degree and 180-270 degree slopes while flattening the regions at 90-180 degrees and 270-360 degrees of each cycle. When it is out of phase with the signal, it tilts the waveform in the opposite fashion. When it is 90 degrees out of phase, one half of the cycle becomes rounded while the other is sharpened, as depicted in the first Figure above. These very different effects have received little public discussion.
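The phase dependence can be checked numerically with a short sketch. It assumes an idealized fundamental sin(wt) plus a second harmonic at an exaggerated 20% level, with the phase offset measured relative to the fundamental's own sine phase; the function name is hypothetical.

```python
import math


def peaks_with_second_harmonic(h2_level, h2_phase_deg, samples=3600):
    """Return (+peak, -peak) of sin(wt) + h2_level * sin(2wt + phase) over one cycle.

    The phase is the offset of the 2nd harmonic relative to the fundamental's own
    sine phase; the level is relative to a unit-amplitude fundamental."""
    phase = math.radians(h2_phase_deg)
    values = []
    for k in range(samples):
        t = 2 * math.pi * k / samples
        values.append(math.sin(t) + h2_level * math.sin(2 * t + phase))
    return max(values), min(values)


for phase in (0, 90, 180):
    pos, neg = peaks_with_second_harmonic(0.2, phase)
    print(f"2nd harmonic at {phase:3d} deg: +peak {pos:.3f}, -peak {neg:.3f}")
# At 0 deg the two peaks stay equal in size (the wave only tilts);
# at 90 deg the +peak drops to 0.800 while the -peak grows to -1.200.
```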

For several decades, it was more or less accepted that second-order distortion may be the most tolerable to the human auditory system. Recent years have called into question the idea that even-order harmonics are perceived as better sounding. Alongside that belief was another: that solid-state devices produced the most pronounced spectrum of high-order harmonic content. Measurements show that such claims are mistaken, and that the opposite is often true: hollow-state designs typically produce the highest levels of high-order content, both even- and odd-ordered. If the goal is low or tailored harmonic distortion, it is very attainable. What is important is that third-order harmonics are now being looked at in a different light. Under certain conditions, they can be perceived as equally acceptable in listening, and sometimes even more so. In addition, nearly all carbon fiber loudspeaker drive units, electrostatic loudspeakers, and many tetrode and pentode amplifiers exhibit predominantly third-order harmonics. Let’s take a few moments to investigate this order of harmonics further.

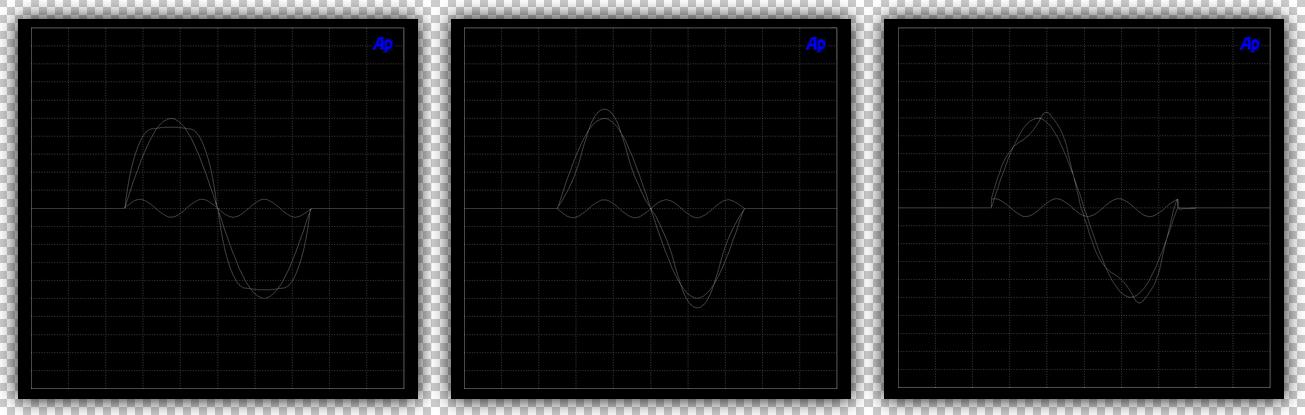

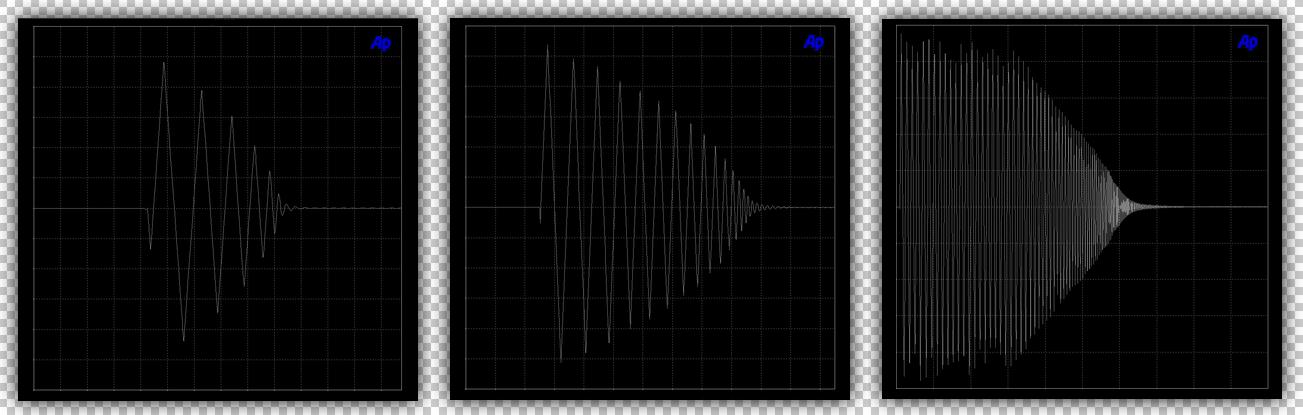

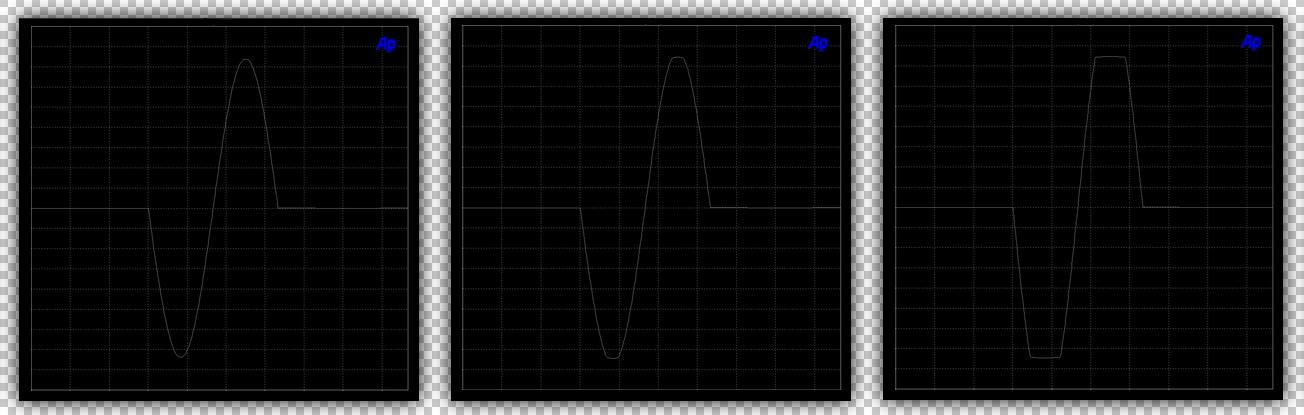

The figures above depict three instances of the same fundamental frequency, each superimposed with approximately equal quantities of third-order harmonic distortion. The level of distortion is higher than we find in production amplifiers and is used for illustrative purposes only. The first image contains a large input signal and a smaller, in-phase third-order harmonic, which is the more common type of third-order distortion present in amplifier designs. The first picture also shows the cumulative flattening effect upon the larger input signal. On the positive (upward) swing, the harmonic combines with the tone to form a steeply rising and falling profile with a reduced peak value. In other words, the crests are flattened by the harmonic. The same occurs on the negative (downward) swing.

The second Figure shows how an out-of-phase third-order harmonic can make the primary tone resemble a triangular waveform while increasing its peak value. In other words, changing the phase causes the waveform to take the opposite shape. In Figure three, we see something rarely viewed or mentioned: a third-order harmonic whose phase is independent of the primary stimulus, in this case sitting at 80 degrees. Take a long look at the waveforms above and notice how much they change. Although they are completely different from one another, each one appears identical in traditional Fast Fourier Transform (F.F.T) analysis, which displays only the magnitude of each component. This exposes the first limitation of the common method of measurement, which I brought to light some years ago.
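The point about identical F.F.T results can be reproduced with a small sketch. It assumes one exact cycle of an ideal fundamental plus a third harmonic at an illustrative 20% level, using the three phases discussed above (0, 180, and 80 degrees), and evaluates a plain DFT by hand so that no external library is assumed.

```python
import cmath
import math

N = 64  # samples covering exactly one fundamental cycle, so DFT bin k is harmonic k


def fundamental_plus_third(h3_level, h3_phase_deg):
    """One cycle of sin(wt) + h3_level * sin(3wt + phase)."""
    phase = math.radians(h3_phase_deg)
    return [
        math.sin(2 * math.pi * n / N) + h3_level * math.sin(3 * 2 * math.pi * n / N + phase)
        for n in range(N)
    ]


def bin_magnitude(x, k):
    """Magnitude of DFT bin k, scaled so a unit-amplitude sine reads 1.0."""
    s = sum(x[n] * cmath.exp(-2j * math.pi * k * n / N) for n in range(N))
    return 2 * abs(s) / N


for phase in (0, 180, 80):
    x = fundamental_plus_third(0.2, phase)
    print(f"3rd at {phase:3d} deg: peak {max(x):.3f}, "
          f"|H1| {bin_magnitude(x, 1):.3f}, |H3| {bin_magnitude(x, 3):.3f}")
# The peaks differ (flattened, sharpened, skewed), yet |H1| and |H3| read 1.000 and 0.200
# in every case: a magnitude-only spectrum discards the phase that shapes the waveform.
```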

Third-order harmonic distortion is somewhat unique, because it can either decompose or accentuate the periodic shape of a waveform with a mere change in phase. In doing so, it creates a new hyperbolic or parabolic profile from the apparent difference of the two waveforms. Several years ago we found that, in a scenario where a third-order harmonic starts at the same point as the fundamental tone yet is out of phase with it, the hyperbolic effects did not act to flatten the crests of the signal. The results closely resembled those of a second-order harmonic. Later, several sub-order classifications were assigned to tangential third-order harmonic distortion alone.

Building on this, it has been possible to create a number of amplifiers with equal magnitudes of third-order harmonics that measure the same in F.F.T testing but sound different from one another. This was accomplished by altering the sequence of sine and cosine phase relationships between the fundamental signal to be amplified and the source of the new harmonic multiple.

Higher-order harmonic distortion can be even- or odd-ordered. Where it bears no immediate correlation with the primary signals, it is generally considered undesirable in audio reproduction. Such harmonics tend to be quite low in amplitude within solid-state designs, yet they can be pronounced in hollow-state circuitry, in some cases falling only thirty-five decibels by the tenth order. In other words, they can be audible. This is not to claim superiority of one technology over the other, but rather to clarify the nature of their behavior.

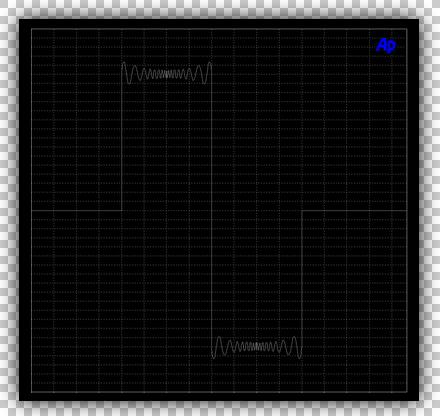

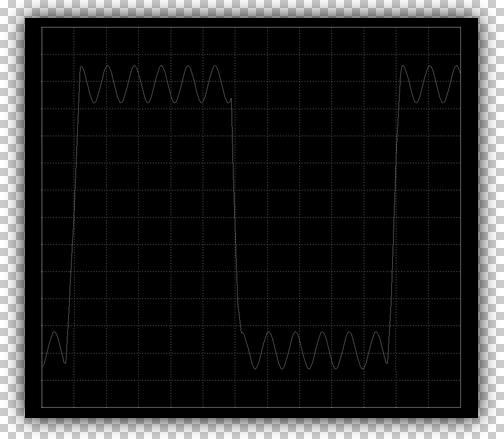

What is important about higher-order Harmonic Distortion is that, when it is permitted to reach high magnitudes, the original sinusoidal waveform is gradually converted toward a near-square profile. An actual square waveform will never occur in normal audio playback unless the amplifier clips, but the effects are still important. Higher harmonics rarely occur alone, and their effects can compound to flatten the crest of an otherwise smooth and round sine signal. In addition, varying the magnitude of each order can produce waveforms that are slightly sawtooth or triangular in shape, as previously shown. Some types of distortion remain within a specific order yet modulate in amplitude at the primary frequency, as depicted in the figure above. These behaviors look alike within the scope of most F.F.T tests performed by conventional distortion analyzers. However, as can be seen here, possibly for the first time in the public domain, they are not one and the same, and the tester must realize this.
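The drift toward a square profile can be illustrated with the textbook Fourier series of a square wave, in which only odd harmonics appear, each at 1/n of the fundamental. This is an idealized assumption chosen for clarity; real amplifier distortion spectra do not follow this exact 1/n pattern.

```python
import math


def odd_harmonic_sum(t, highest_order):
    """Partial Fourier series of a unit square wave: (4/pi) * sum of sin(n*t)/n over odd n."""
    return (4 / math.pi) * sum(
        math.sin(n * t) / n for n in range(1, highest_order + 1, 2)
    )


def crest_spread(highest_order):
    """Max minus min of the partial sum between 30 and 150 degrees, where a square wave is flat."""
    crest = [odd_harmonic_sum(math.radians(d), highest_order) for d in range(30, 151, 5)]
    return max(crest) - min(crest)


for order in (1, 3, 9, 49):
    print(f"odd harmonics up to order {order:2d}: crest spread {crest_spread(order):.3f}")
```

As more odd orders are added, the spread across the crest shrinks and the crest flattens toward a constant level.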

In closing on this section, the fate of life as we know it hangs in the balance of three things we have learned: red is the highest-maintenance color for a car; no matter how large that backyard deck is, it’s still too small; and finally, if all the energy in a sinusoidal signal is contained at the fundamental frequency, then that signal is a perfect sine wave. If the signal is not a perfect sine wave, as it never is, then some of its energy must be contained in the distortion. In this way, energy is neither created nor destroyed but remains constant, and existing distortions can be accounted for. However, this does not imply that all distortions are simple harmonic multiples or that they can be described through this measurement alone.
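The energy bookkeeping described above is Parseval's relation. In the sketch below, which uses hypothetical harmonic amplitudes, the mean-square value of the whole waveform equals the sum of the mean-square values of its components. Any energy not found at the fundamental is therefore accounted for by the distortion terms.

```python
import math

N = 1000  # samples spanning exactly one cycle of the fundamental


def mean_square(peak_amplitudes):
    """Mean-square value of a signal whose k-th entry is the peak amplitude of harmonic k+1."""
    total = 0.0
    for n in range(N):
        v = sum(a * math.sin(2 * math.pi * (k + 1) * n / N) for k, a in enumerate(peak_amplitudes))
        total += v * v
    return total / N


amps = [1.0, 0.05, 0.03]                   # hypothetical fundamental, 2nd and 3rd harmonic
per_component = [a * a / 2 for a in amps]  # a sine of peak amplitude a has mean square a^2/2
whole_signal = mean_square(amps)
print(f"time domain {whole_signal:.6f} = sum of components {sum(per_component):.6f}")
print(f"energy outside the fundamental: {100 * (1 - per_component[0] / whole_signal):.3f} %")
```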

Crossover Distortion

Another source of harmonic distortion is crossover distortion. To understand its source, three amplifier classes must first be briefly distinguished. There are several alphabetical classifications for the operation of amplifiers, and they relate to the requirements and methods of biasing the active amplifying devices. Biasing moves the active devices away from their initial turn-on region and higher along their transconductance curves, into their linear mode of operation. For audio reproduction, the bulk of amplifiers will be of the transimpedance-operation common-emitter and common-cathode varieties.

Classification A amplifiers can use a single device, or several in parallel, to conduct the entire 360 degrees of an audio waveform. They can also be built using even-numbered complementary pairs of devices. In either case, the devices are biased at the center point of their load line, to a level that is half their intended maximum signal swing, known as the Q-point. This permits symmetrical reproduction of forward and reverse signal currents.

Classification B amplifiers essentially always use even-numbered quantities of complementary devices, with one reproducing the positive half and the other reproducing the negative half of the waveform. While one device reproduces its half of the waveform, the other is completely turned off and ceases to conduct. This type of amplifier is not biased, and typically is not intended for audio reproduction.

Class AB amplifiers employ even-numbered devices like Classification B types. However, AB designs bias the devices at a position along the load line that is above the “crossover region”, where the devices meet at 0, 180, and 360 degrees, but below the center of the load line. This constantly powered state causes each half of the complementary pair to conduct for less than 360 degrees but more than 180 degrees (half the full cycle), which reduces and can even eliminate switching transients. These amplifiers do not have to be biased near half their intended maximum signal swing, since the bias merge point between both devices forms this point. Even so, some Class AB amplifiers can be biased high enough to operate in true Class A.
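The link between bias and conduction angle can be sketched for an idealized device, assuming its current is Iq + Ipk·sin(wt) and that it simply cuts off whenever that sum would fall below zero. Real devices turn off gradually, so this is a first-order model only, and the current values are hypothetical.

```python
import math


def conduction_angle_deg(quiescent_a, peak_signal_a):
    """Degrees per cycle that one idealized device conducts with current Iq + Ipk*sin(wt)."""
    if quiescent_a >= peak_signal_a:
        return 360.0  # never cuts off: Class A behaviour
    # The device conducts while sin(wt) > -Iq/Ipk, i.e. for 180 + 2*asin(Iq/Ipk) degrees.
    return 180.0 + 2.0 * math.degrees(math.asin(quiescent_a / peak_signal_a))


print(conduction_angle_deg(0.0, 2.0))  # 180.0 -> Class B
print(conduction_angle_deg(0.5, 2.0))  # about 209 -> Class AB
print(conduction_angle_deg(2.0, 2.0))  # 360.0 -> Class A
```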

While it may not be clear yet, Crossover Distortion can only occur when signals are reproduced at current levels greater than the quiescent bias of an amplifier that uses complementary devices. Reduced to its lowest common denominator, it can only exist where there is a transition, or “crossover”, between two active amplifying devices, such as in Classification B and AB amplifiers. Crossover Distortion is quantified as a stationary harmonic distortion component that remains at a roughly constant level regardless of the input level. Because its absolute level stays constant, lower-level audio signals are proportionally more distorted than larger ones. Put into perspective, the first one-hundredth of a Watt may be very distorted, while the thousandth Watt is exemplary. However, Crossover Distortion cannot exist in the power range at or below the quiescent bias value.
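The level dependence can be illustrated with a deliberately crude model: a hypothetical unbiased (Class B) push-pull stage represented as a dead zone, so nothing reaches the output until the input exceeds a small threshold. The 0.05 V dead zone and the test levels are assumptions chosen for illustration, and T.H.D is computed from a hand-written DFT.

```python
import cmath
import math

N = 256  # samples over one period of the test tone


def deadband(v, dead_zone):
    """Hypothetical unbiased push-pull stage: no output until |v| exceeds dead_zone."""
    if v > dead_zone:
        return v - dead_zone
    if v < -dead_zone:
        return v + dead_zone
    return 0.0


def bin_amplitude(x, k):
    s = sum(x[n] * cmath.exp(-2j * math.pi * k * n / N) for n in range(N))
    return 2 * abs(s) / N


def thd_percent(amplitude, dead_zone=0.05, max_order=15):
    y = [deadband(amplitude * math.sin(2 * math.pi * n / N), dead_zone) for n in range(N)]
    fundamental = bin_amplitude(y, 1)
    harmonics = [bin_amplitude(y, k) for k in range(2, max_order + 1)]
    return 100 * math.sqrt(sum(h * h for h in harmonics)) / fundamental


for amplitude in (0.1, 1.0, 10.0):
    print(f"input {amplitude:5.1f} V peak: THD ~ {thd_percent(amplitude):.2f} %")
```

The absolute error introduced around each zero crossing stays about the same size, so its share of the output falls as the signal grows.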

The lower-voltage regions of some older devices exhibited varying degrees of non-linearity. As a result, if the bias voltage and current were too low, crossover distortion could be introduced onto the signal as it crossed the zero-voltage points at 0, 180, and 360 degrees. This became known as the solid-state sound of that time, although it was just as prominent in hollow-state designs that were biased equally low. Devices dating from the nineteen seventies would have required far higher quiescent values and still would never have achieved the unprecedented linearity available just one decade later. Very few audio companies published harmonic distortion results at very low power levels, and this concealed the sheer magnitude of the crossover distortion that was present. One exception, deserving of recognition for its efforts, was Yamaha Corporation of Japan. Working with Toshiba Semiconductors, Yamaha’s engineers literally wrote the books on measuring distortion and on minimizing its effects at low, high, and all levels in between.

Today, semiconductors and other active devices have benefited from decades of electrochemical and physics research. Because of this theoretical and practical work, crossover distortion in Class AB amplifiers is now largely a non-issue when properly accounted for. For many modern semiconductors, the linear region of transconductance is reached with relatively small voltages and currents, and adequate biasing eliminates Crossover Distortion completely. As such, competently engineered Class AB amplifiers are capable of truly superlative performance, while those that are not competently engineered are not.

Most amplifiers employ potentiometers to allow adjustment of the bias points of the amplifier’s voltage pre-driver and output stages. These adjustable resistive controls allow the user to locate the practical balance between distortion and wasted energy, but care must be taken that the quiescent current is neither too low nor too high. There is a wide range where the bias level can safely reside, but too low a setting will allow the devices to turn off, imposing a variety of sharp transients on the output. Too high a bias could result in premature failure of the biased devices, or increase the induction noise from the associated power supply. Some designs feature automatic biasing that increases in steps with the output level. A very limited number of premier designs can even alter the bias dynamically in accordance with signal demand.

Intermodulation Distortion

Intermodulation Distortion is another non-linear behavior found within electronics and stages of amplification. Abbreviated as I.M.D, it is characterized by an output waveform that carries bands of new frequencies equal to the sums and differences of integer multiples of two or more frequencies in the original input signal. As complex as this may sound, once one has a firm grasp on the origins and nature of the distortion, it is not so confusing. The primary difference between I.M.D and harmonic distortion is that two or more different frequencies must be present simultaneously to produce Intermodulation Distortion, whereas harmonic distortion needs only one frequency in order to form. In addition, Intermodulation Distortion products are not always harmonically related to the original frequencies.
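The sum-and-difference rule can be enumerated directly. The sketch below lists |m·f1 + n·f2| for non-zero integers m and n, grouped by order |m| + |n|, and leaves out the pure harmonics of each tone. The 19 kHz and 20 kHz pair is an assumed example of the kind used in twin-tone tests.

```python
def imd_products(f1, f2, max_order=3):
    """Frequencies |m*f1 + n*f2| for non-zero integers m, n with 2 <= |m| + |n| <= max_order.
    Pure harmonics of a single tone (m or n equal to zero) are excluded."""
    products = {}
    for m in range(-max_order, max_order + 1):
        for n in range(-max_order, max_order + 1):
            order = abs(m) + abs(n)
            if m == 0 or n == 0 or order < 2 or order > max_order:
                continue
            f = abs(m * f1 + n * f2)
            if f > 0:
                products.setdefault(order, set()).add(f)
    return {order: sorted(freqs) for order, freqs in sorted(products.items())}


print(imd_products(19_000, 20_000))
# {2: [1000, 39000], 3: [18000, 21000, 58000, 59000]}
```

Note the 1 kHz second-order difference product, far below both tones, and the 18 kHz and 21 kHz third-order products sitting right beside them.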

When a device under test is subjected to concurrent signals, that is, more than one signal at the same time, non-linearities inherent in that device will produce additional signal content at frequencies other than those present on the input. We have previously covered how an amplifier can produce even- and odd-order harmonic distortion, each harmonic being an integer multiple of the fundamental signal. In addition to those, Intermodulation Distortion manifests as second-order and third-order products at every combination of the input frequencies and their harmonics, which are not harmonically related to the original signal. Alongside the harmonics, widely spread new second-order difference-frequency products may occur below the primary signals.

The standard measure for this phenomenon is based on a two-tone stimulus, and the results are best represented by an F.F.T graph. The Society of Motion Picture and Television Engineers (SMPTE), DIN, and CCIF set the accepted industry standards for these tests, with the two primary classifications being I.M.D 2 and I.M.D 3 for the second and third orders of Intermodulation, respectively. The nature of this type of distortion is somewhat complex at first, but it is quite easily and reliably modeled through convolution theorems.
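A minimal model of the two-tone test passes two equal tones through a hypothetical memoryless stage, y = x + a2·x² + a3·x³. Squaring or cubing in time corresponds to convolving the spectrum with itself in frequency, which is why new products appear at sums and differences. The tone bins and coefficients are arbitrary assumptions, chosen so that every product lands on an exact DFT bin.

```python
import cmath
import math

N = 128         # samples in the analysis window
F1, F2 = 7, 10  # tone frequencies in whole cycles per window, so each lands on an exact DFT bin


def two_tones_through(a2, a3):
    """Two equal 0.5-amplitude tones passed through y = x + a2*x**2 + a3*x**3."""
    out = []
    for n in range(N):
        x = 0.5 * math.sin(2 * math.pi * F1 * n / N) + 0.5 * math.sin(2 * math.pi * F2 * n / N)
        out.append(x + a2 * x ** 2 + a3 * x ** 3)
    return out


def bin_amplitude(x, k):
    """Amplitude of DFT bin k, scaled so a unit-amplitude tone reads 1.0."""
    s = sum(x[n] * cmath.exp(-2j * math.pi * k * n / N) for n in range(N))
    return 2 * abs(s) / N


square_law = two_tones_through(a2=0.1, a3=0.0)
cube_law = two_tones_through(a2=0.0, a3=0.1)
for name, y in (("square law", square_law), ("cube law", cube_law)):
    im2 = bin_amplitude(y, F2 - F1)      # second-order difference product, F2 - F1
    im3 = bin_amplitude(y, 2 * F1 - F2)  # third-order product just below F1, 2*F1 - F2
    print(f"{name}: IM2 {im2:.4f}, IM3 {im3:.4f}")
```

The square-law term produces only second-order products, and the cube-law term produces only third-order ones. This is one reason the two orders are reported separately.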

Two of the most challenging distortion products in I.M.D tests are the third-order products at 2f1 - f2 and 2f2 - f1, which fall directly adjacent to the two input tones f1 and f2. For modulated signals, that is, two or more mixed signals of which one or more varies in amplitude, third-order distortion creates additional frequency content in bands adjacent to the modulated signal, some of it subject to periodic summation. This distortion is known as Spectral Regrowth, and on an F.F.T graph it may be identified as a fattening and widening of the noise spectrum surrounding the primary tones.

There are a variety of ways to measure this distortion, including the second-order intercept point (IP2), used for products caused by components that behave according to a Square Law. Another commonly used method is the third-order intercept point (IP3), employed for artifacts introduced by components that behave according to a Cube Law. This is where the argument for better engineered, higher priced precision test equipment gains considerable ground, as lossy audio interfaces and low-cost analyzers will obscure the results among their own distortion artifacts.
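Intercept points are usually extrapolated from a single two-tone reading. The usual reasoning is that, for each 1 dB rise in input, square-law products rise 2 dB and cube-law products rise 3 dB, so the gap to the fundamental closes at 1 dB/dB and 2 dB/dB respectively. The sketch below applies those standard small-signal relations to hypothetical bench readings.

```python
def iip3_dbm(pin_per_tone_dbm, fund_out_dbm, im3_out_dbm):
    """Input-referred third-order intercept from one two-tone measurement.
    IM3 rises 3 dB for every 1 dB of input, so the gap closes at 2 dB/dB."""
    return pin_per_tone_dbm + (fund_out_dbm - im3_out_dbm) / 2


def iip2_dbm(pin_per_tone_dbm, fund_out_dbm, im2_out_dbm):
    """Input-referred second-order intercept: IM2 rises 2 dB/dB, so the gap closes at 1 dB/dB."""
    return pin_per_tone_dbm + (fund_out_dbm - im2_out_dbm)


# Hypothetical bench readings: -20 dBm per tone in, 0 dBm per tone out (20 dB of gain),
# IM3 products at -60 dBm and the IM2 difference product at -50 dBm.
print(iip3_dbm(-20.0, 0.0, -60.0))  # 10.0 (the output-referred OIP3 is 20 dB higher, 30 dBm)
print(iip2_dbm(-20.0, 0.0, -50.0))  # 30.0
```

These relations hold only while the measurement stays well below compression, and the analyzer's own distortion must sit comfortably below the products being measured.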

Passive Intermodulation Distortion

We have established that intermodulation occurs as a result of an active non-linear system simultaneously reproducing two tones. However, Passive Intermodulation Distortion, or P.I.M as it is abbreviated, occurs in unpowered devices when two or more high-power tones mix in the presence of physical non-linearities. Environments that can introduce P.I.M include junctions of dissimilar metals, metal-oxide junctions, rusty screws, and even loose connectors and solder joints. Another example pertains to radio-controlled aircraft: should two metal surfaces vibrate against each other, they can pick up the pulse-width modulated control signals and re-radiate them. Passive Intermodulation Distortion may carry enough additional noise energy to swamp lower frequency two-way transceivers. It is also pertinent to audio equipment.

The higher the signal amplitude, the more pronounced the effect of the non-linearities and the greater the intermodulation that occurs. Depending on the two fundamental tones present, their intermodulation products may add and multiply to completely obscure the target frequency. This holds great relevance, because a system can appear linear on initial inspection, and therefore unable to generate intermodulation, yet still produce it. Hysteresis in ferromagnetic materials can generate passive intermodulation products when such materials are exposed to reversing magnetic fields, and this is the primary reason why gold plating over zinc solder mask is omitted in some designs. Passive intermodulation can also be generated in components with manufacturing and workmanship defects, again such as insufficiently terminated or cracked solder joints, or poorly made mechanical contacts. Passive intermodulation cannot exist in ordinary designs in the absence of amplitude modulation distortion. In some instances, the active components are much quieter than the passive components used alongside them.

Phase Response

Phase Response represents the amount of phase change that a device adds to frequencies as they are reproduced. Phase shift is expressed in degrees and describes the advancement or delay of phase relative to the input signal waveform. A theoretically perfect amplifier would introduce no change in phase at any frequency reproduced, giving a net phase shift of zero. The unfortunate news is that nothing in our world is perfect, including amplifiers. The uplifting news is that we can design audio amplifiers with a Phase Response of single-digit degrees or better, where the effects are of little importance.

Phase shift and phase delay describe the same effect: a phase shift at a given frequency corresponds to a time delay at that frequency. Phase shift typically occurs at the frequency extremes and is typically minimal throughout the mid-band region. Most production home audio amplifiers have less than 10 degrees of phase lag (-10 degrees) near 20kHz. This corresponds to a delay of about 1.4 microseconds (µs) at that frequency relative to lower frequencies. This amount of shift is mostly insignificant, even in the most demanding live audio applications.
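The conversion from degrees to time is simply the fraction of a cycle multiplied by the period. The sketch below reproduces the figures used in this section (the function name is hypothetical).

```python
def phase_to_delay_us(phase_deg, frequency_hz):
    """Time shift equivalent to a phase shift at one frequency: (phase / 360) * period, in us."""
    return (phase_deg / 360.0) / frequency_hz * 1e6


print(f"{phase_to_delay_us(10, 20_000):.1f} us")     # 1.4 us: 10 degrees at 20 kHz
print(f"{phase_to_delay_us(10, 20):.1f} us")         # 1388.9 us, about 1.4 ms: 10 degrees at 20 Hz
print(f"{phase_to_delay_us(60, 20) / 1000:.2f} ms")  # 8.33 ms: 60 degrees at 20 Hz
```

The same number of degrees represents a thousand times more delay at 20 Hz than at 20 kHz, which is why low-frequency phase shift deserves the closer attention given below.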

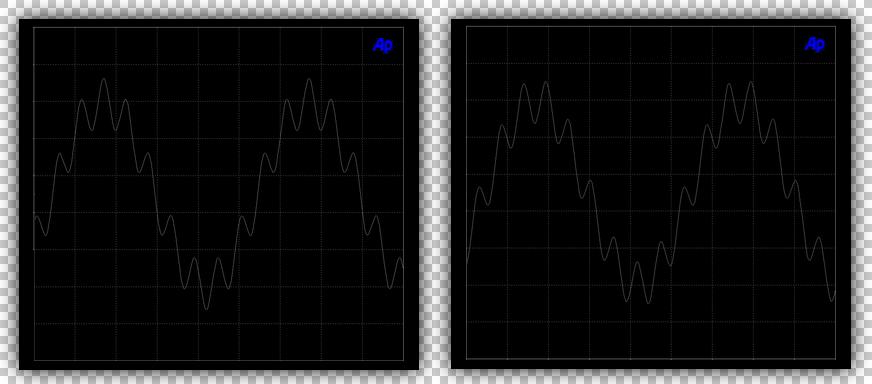

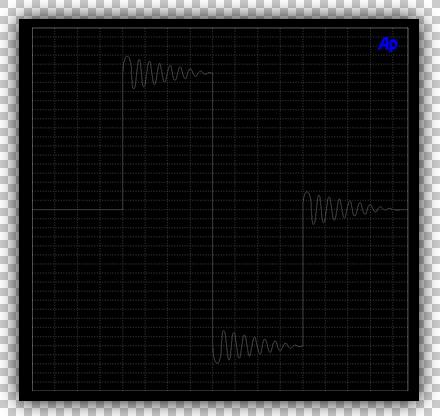

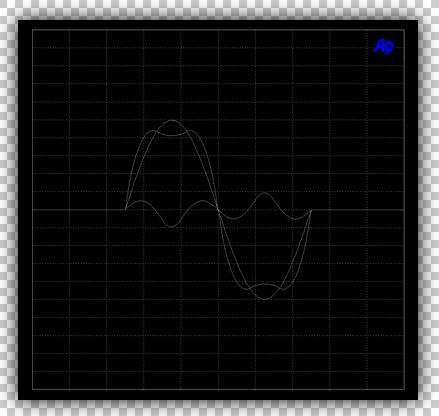

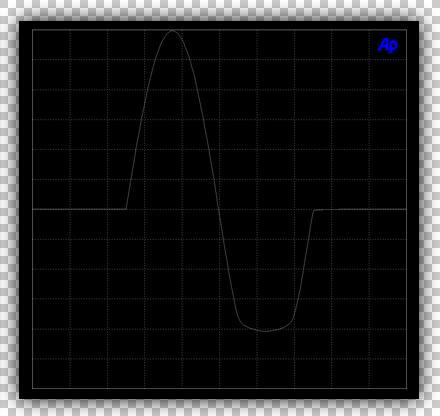

In the Figure above, two scope images have been captured. In the second image, the effect of excessive phase shift is readily visible in the changed relationship between the higher frequencies and the carrier. When this occurs during music playback, timing cues fall out of sync and timbres are altered.

Larger phase shifts are much more common in the lower frequency regions, as a consequence of DC-blocking and high-pass filtering circuitry. Phase shift becomes more problematic as frequency decreases because the same number of degrees corresponds to a longer time shift at a lower frequency, making the timing error more audible to the listener. Amplifiers that display large phase shifts also introduce large delays to the frequency components that fall in those regions. If we examine the relationship between slightly higher harmonics and a 20 Hertz carrier with 10 degrees of phase shift, the bass and its harmonics fall out of timing by 1.4 milliseconds (ms), far longer than the equivalent shift at 20kHz (1.4 microseconds [µs]).
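As a rough sketch, assume the low-frequency shift comes from a single first-order RC coupling (high-pass) network. Real amplifiers usually cascade several such networks, so their total shift is larger. The phase lead of one network is atan(fc / f), which grows quickly as the frequency approaches the corner.

```python
import math


def highpass_phase_deg(f_hz, corner_hz):
    """Phase lead of a single first-order RC high-pass (coupling) network: atan(fc / f)."""
    return math.degrees(math.atan(corner_hz / f_hz))


def corner_for_phase(f_hz, phase_deg):
    """Corner frequency that produces the given phase lead at f_hz."""
    return f_hz * math.tan(math.radians(phase_deg))


fc = corner_for_phase(20, 10)  # corner that gives the text's 10 degrees at 20 Hz
print(f"corner {fc:.2f} Hz")   # 3.53 Hz
for f in (20, 40, 200, 2000):
    print(f"{f:5d} Hz: {highpass_phase_deg(f, fc):5.2f} deg")
```

Under this assumption, even a corner near 3.5 Hz, far below the audible band, still leaves 10 degrees of shift at 20 Hz.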

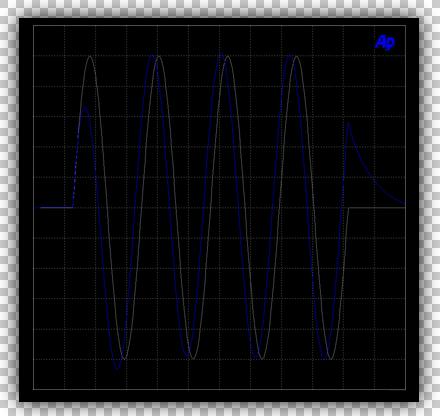

In the Figure above, there are two low frequency waveforms without any harmonics. These waveforms should be the same, but they are quite different. The grey waveform is the input signal, and the output signal is shown in blue. For this arbitrary circuit, the high degree of phase shift leads to a gradual build-up in signal strength, and to additional distortion as the signal ceases. This is an example of an audio device that will demonstrate poor bass impact, regardless of how large its power supply is, and despite measuring mostly flat with continuous sine signals.

In audio, a millisecond is a long time in which many events can occur, and one can pull a variety of audio components from the shelf and find phase deviations larger than this. For example, hollow-state thermionic valve amplifiers may have as much as 60 degrees of phase shift at 20Hz, which is associated with their passive cathode biasing and their choice of DC-blocking coupling capacitor values. At 60 degrees and 20Hz, the result is an 8.33 millisecond deviation, which leads to notable attenuation and alteration of the waveforms. It invariably contributes to the destructive breakdown of the original harmonic and organic beat structures found in natural sounds.

Phase Response and impulse response go hand in hand, although they have been separated in this article to disambiguate their interpretations. Phase Response is also different from transient latency and physical delay, which have their own section. Because phase shift alters the timing of existing frequency components without creating new ones, it is classified as a linear distortion mechanism.

Absolute Phase

Absolute Phase, which is often confused with polarity, applies to the apparent direction of signal swing for a given period. An electronic component with Absolute Phase integrity maintains the signal’s phase relationship: positive swings in produce positive swings out. In a practical audio system, this is most readily demonstrated by reproducing low frequencies through a large loudspeaker next to an oscilloscope, or another device that shows the signal coming in from the source. For example, when the signal is on a positive swing, a system with Absolute Phase will cause a low frequency driver to move forward. A system with opposite phase will cause the driver to move in the opposite direction, even though the original source signal was on a positive swing. Phase measurements are taken at the device’s outputs and then compared directly with the input.

In consumer home audio, some electronic audio components have been designed with inverted phase outputs. This was common among hollow-state preamps and simplified solid-state methods of gain control, before the advent of lower-noise devices. In more recent times, designers have opted to avoid phase inversion, and in essence, nothing was lost in gaining Absolute Phase. The reasoning is sound: if the phase must be reversed, the reversal should not affect every source. Many people are unaware that their system may reverse the phase of the input signal. However…

Phase is not only inherent in an audio system, but also applies to the recorded media and other sources of reference. This is called Relative Phase, and although it does not deserve its own section, it does warrant some mention. Not all recordings are phase correct, and this is particularly true of some vinyl pressings. As a result, some manufacturers include a phase inversion switch on their phonograph stages so the listener can decide what sounds best. While extensive studies and recording professionals suggest that the auditory system is insensitive to the polarity of an asymmetric sinusoidal signal, some sources also suggest that this is best left to the end user to determine.

Latency

Latency is a condition in which the output signal reproduced by a device is delayed, so that it is no longer simultaneous with the input signal. Some analog circuits are constructed in such a manner that there may be latency, producing a distinct difference in alignment between the two signals. This becomes important when the audio, or other signal, has to be referenced against another live event, such as video. Digital audio processors introduce the greatest timing error, as any digital interface operates on the principle of data sampling: the time it takes to collect and process the data determines the apparent Latency. Latency is usually uniform across all frequencies pertinent to audio reproduction, and the gross difference appears between events as a perceived lag in time.
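For a digital processor, a lower bound on latency follows from how many samples are buffered before output. The sketch below assumes that buffering is the only delay. Real devices also add converter filter and processing delay, and the buffer sizes shown are hypothetical.

```python
def buffer_latency_ms(buffer_samples, sample_rate_hz, buffers=1):
    """Delay from holding `buffers` blocks of `buffer_samples` samples before they are output."""
    return 1000.0 * buffers * buffer_samples / sample_rate_hz


print(f"{buffer_latency_ms(512, 48_000):.2f} ms")            # 10.67 ms
print(f"{buffer_latency_ms(64, 96_000, buffers=2):.2f} ms")  # 1.33 ms
```

Because the delay is a fixed number of samples, it is the same at every frequency, unlike the frequency-dependent phase delay discussed earlier.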

Oscillation, Stability, and Phase Margin

The Stability of an amplifying stage indicates its inherent immunity to a condition known as Oscillation, the sustaining of a signal at the output after the input stimulus has ceased. Oscillation can be self-induced or result from an external factor, such as a reactive load placed at the output of an amplification stage. It is important that the chances of oscillation be mitigated; otherwise, the oscillation may build in magnitude to the point that it overdrives the amplifying device, possibly damaging it and the load.

Every device, be it semiconductor or thermionic valve, has an upper frequency limit where its phase reverses 180 degrees. This is a naturally occurring part of electronic and mechanical bodies. The first-order corner frequency (pole) of an active amplifying device may reside near one Megahertz (MHz, million cycles per second), while that of logic devices may reside in the low Gigahertz range (GHz, billion cycles per second). This pole causes frequencies above it to attenuate at six decibels per octave, corresponding to 20 decibels per decade. This is a single time constant, and the device will continue to attenuate at this rate until the response meets another pole.
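The 6 dB per octave and 20 dB per decade figures follow from the first-order magnitude response, 1/sqrt(1 + (f/fc)²). The sketch below checks them numerically for a hypothetical 1 MHz corner.

```python
import math


def single_pole_gain_db(f_hz, corner_hz):
    """First-order low-pass magnitude, 1 / sqrt(1 + (f/fc)^2), expressed in dB."""
    return -10 * math.log10(1 + (f_hz / corner_hz) ** 2)


fc = 1e6  # hypothetical first-order corner near 1 MHz
per_octave = single_pole_gain_db(20e6, fc) - single_pole_gain_db(40e6, fc)
per_decade = single_pole_gain_db(10e6, fc) - single_pole_gain_db(100e6, fc)
print(f"at the corner: {single_pole_gain_db(fc, fc):.2f} dB")  # -3.01 dB
print(f"well above it: {per_octave:.2f} dB/octave, {per_decade:.2f} dB/decade")
```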

An amplifier with a single time constant and zero phase shift at unity gain (unity gain means the signal is neither reduced nor increased as it passes through the device, a gain of 1) is always stable, but many active devices have at least two primary poles. Above the second pole, the response falls at twelve decibels per octave, or 40 decibels per decade, twice the rate of a single time constant. If the device’s frequency response naturally drops below unity gain before the second pole is encountered, it will be unconditionally stable at any gain level. If the second pole is encountered above unity gain (a gain of 1), special attention will be required in some implementations. In a few moments we’ll take a brief look at how that gain is determined.

The stability of an amplifier is represented by its Phase Margin (P.M.) in degrees, which states how far the loop phase remains from the 180 degree point at the frequency where loop gain falls to unity. If a shift superimposed back onto the input through parasitic paths or design errors brings the phase to 180 degrees (zero phase margin) while gain is still at or above unity, oscillation can occur. Stability is also indicated by what is called the K-factor. A K-factor of 1.0 is the boundary condition for unconditional Stability, and a device with a K-factor of less than 1.0 is only conditionally stable. An amplifier with a K-factor greater than 1.0 will not oscillate, regardless of the signal being applied, the source impedance, or the load phase angle.
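Phase margin can be estimated for a simple model. The sketch below assumes a hypothetical two-pole loop gain, T(f) = T0 / ((1 + jf/p1)(1 + jf/p2)), and finds the frequency where |T| = 1 by bisection. The margin is 180 degrees plus the loop phase at that frequency. It computes phase margin only, not the K-factor.

```python
import cmath
import math


def loop_gain(f_hz, dc_gain, pole1_hz, pole2_hz):
    """Hypothetical two-pole loop gain T(f) = T0 / ((1 + jf/p1) * (1 + jf/p2))."""
    return dc_gain / ((1 + 1j * f_hz / pole1_hz) * (1 + 1j * f_hz / pole2_hz))


def phase_margin(dc_gain, pole1_hz, pole2_hz):
    """Locate |T| = 1 by bisection on a log frequency axis; PM = 180 + phase(T) there."""
    lo, hi = 1e-3, 1e12  # |T| falls monotonically, so the crossover lies between these
    for _ in range(200):
        mid = math.sqrt(lo * hi)
        if abs(loop_gain(mid, dc_gain, pole1_hz, pole2_hz)) > 1:
            lo = mid
        else:
            hi = mid
    crossover = math.sqrt(lo * hi)
    phase_deg = math.degrees(cmath.phase(loop_gain(crossover, dc_gain, pole1_hz, pole2_hz)))
    return crossover, 180 + phase_deg


for p2 in (1e6, 10e3):  # second pole well above, then close to, the crossover
    fc, pm = phase_margin(1000, 100, p2)
    print(f"second pole {p2:9.0f} Hz: crossover {fc / 1e3:6.1f} kHz, phase margin {pm:5.1f} deg")
```

Moving the second pole down toward the crossover frequency shrinks the margin from roughly 84 degrees to under 20, which brings the loop much closer to oscillation.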

A device operating without negative feedback is known as open loop. This condition offers the highest possible resistance between the input and output of a device, with little to no interaction between them. It results in limited high-frequency response, high levels of distortion, and gain that is often too high to be usable in audio applications. Feedback eases the situation and greatly improves several parameters of the device; it works by trading gain for higher linearity and lower distortion.

When a conductive or resistive path is placed between the output and the input, it is known as a closed loop. For most audio applications, local negative feedback is the most common type, with a resistive or conductive path closing the loop to set the desired gain. As that feedback resistance is decreased, more feedback is applied and the gain is reduced, while the bandwidth increases and the distortion decreases.
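The trade of gain for bandwidth and linearity follows the textbook feedback relations: closed-loop gain A/(1 + Aβ), with bandwidth multiplied and distortion divided by the same factor (1 + Aβ). This Python sketch assumes a non-inverting stage whose feedback fraction β is set by a hypothetical Rf/Rg divider, an ideal single-pole open-loop response, and distortion generated inside the loop; all values are illustrative. Lowering Rf raises β, which is the "less resistance, more feedback" behavior described above.

```python
def closed_loop(a_open, f_open_hz, r_f, r_g, thd_open_pct):
    """Idealized negative-feedback relations for a non-inverting stage with a single-pole open loop."""
    beta = r_g / (r_f + r_g)                 # fraction of the output voltage fed back
    desensitivity = 1 + a_open * beta        # the factor by which feedback acts
    gain = a_open / desensitivity            # closed-loop gain, A / (1 + A*beta)
    bandwidth = f_open_hz * desensitivity    # bandwidth widens by the same factor
    thd = thd_open_pct / desensitivity       # distortion falls by the same factor
    return gain, bandwidth, thd

# Hypothetical device: open-loop gain of 100,000, a 10 Hz open-loop pole, and 1 % open-loop THD.
gain, bw, thd = closed_loop(1e5, 10.0, r_f=9_000, r_g=1_000, thd_open_pct=1.0)
print(f"gain {gain:.3f}, bandwidth {bw:.0f} Hz, THD {thd:.5f} %")
```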

Feedback is an often confused concept, as it does have a learning curve. Many believe that it is the act of taking the output signal of a device and sending it back to the input. This is incorrect: feedback is a method of taking the output voltage, or a portion thereof, and presenting it back at the device’s input. While these two concepts appear identical, they are not exactly the same, and the distinction becomes more important as one advances.

With enough feedback, the loop may reach the device’s 180-degree phase shift point. To allow the device to be used safely with high feedback, a small-value compensation capacitor, called a Miller capacitor, may be placed in parallel with the feedback resistor. It causes the circuit to act as a phase advancer and attenuates the high-frequency gain. In doing so, it keeps the gain at ultrasonic frequencies from reaching the critical 180-degree point, where the feedback would otherwise become positive and produce some level of oscillation and distortion.

The net result of feedback is a flatter transfer gain over the designated bandwidth and much lower distortion, while Miller capacitance helps prevent instability. Attenuating high frequencies or moving the poles upward in frequency allows more feedback to be applied, and thus improved linearity. However, there is an important balance to strike to ensure that high-frequency integrity is not compromised. There is a point where a given amplifying device performs its best, and beyond it too much feedback will require excessive compensation, compromising the slew rate.
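The cost of heavy compensation shows up through the standard slew-rate relation: a sine of peak amplitude Vp at frequency f needs a slew rate of at least 2πfVp. The Python sketch below assumes a hypothetical amplifier swinging 40V peak (about 100W into 8 ohms); the helper names are illustrative.

```python
import math

def full_power_bandwidth(slew_v_per_us, v_peak):
    """Highest frequency a sine of peak amplitude v_peak can reach without slew limiting: SR / (2*pi*Vp)."""
    return slew_v_per_us * 1e6 / (2 * math.pi * v_peak)

def required_slew(f_hz, v_peak):
    """Slew rate in V/us needed by a sine of frequency f_hz and peak amplitude v_peak: 2*pi*f*Vp."""
    return 2 * math.pi * f_hz * v_peak / 1e6

fp = full_power_bandwidth(10, 40)   # hypothetical 10 V/us amplifier at 40 V peak
print(f"{fp / 1e3:.1f} kHz full-power bandwidth at 10 V/us")
print(f"{required_slew(20e3, 40):.2f} V/us needed for 20 kHz at full swing")
```

If compensation drags the slew rate down, the full-power bandwidth shrinks in direct proportion.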

On your audio journey, you may have heard mention of something called Zero-Feedback. The term is often used in the audio industry to claim that an amplifier is bereft of any feedback. It is both a misnomer and an inaccurate description of how the amplifier is designed and operates. What perhaps should be stated in its place is that the design uses near-zero decibels of X-type feedback, or zero global feedback, whichever is applicable to the design, because there are clear feedback paths on the schematics. Others in the industry have also observed that each design claimed to be bereft of feedback was quite the opposite: those designs simply traded global feedback for local loops. Still others use a closed loop whose high feedback resistance brings the gain close to the open-loop value, and then label the result zero-feedback as though that made it a zero-feedback circuit.

The only notable exceptions are a couple of transconductance designs that have disappeared and resurfaced over the years. They used autoformers for voltage gain, feeding a pair of JFETs or MOSFETs. Even these use passive or active degeneration, which is itself a type of feedback. In these designs, the absence of negative feedback comes at the expense of distortion, poor output impedance, and a very limited bandwidth. The engineering fact of the matter is that any amplifier that uses a non-zero resistance or impedance on the cathode, emitter, or source is, by definition, using feedback. For example, single-ended Class A amplifiers require a passive, and sometimes active, cathode or emitter resistance (sometimes in conjunction with a capacitive reactance to improve low-frequency extension) to place the Q-point approximately halfway along the load line. This constitutes degenerative feedback. Should there be no resistance or impedance, the amplifier no longer reproduces audio waveforms.
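The degenerative feedback of an emitter resistor can be seen in the common small-signal approximation for a common-emitter stage, gain ≈ -Rc / (re + Re), where re = VT/Ic. This Python sketch assumes a hypothetical 10 kohm collector load biased at 1mA and ignores transistor output resistance, loading, and beta effects; `ce_gain` is an illustrative helper.

```python
def ce_gain(r_c, r_e, i_c, v_t=0.02585):
    """Approximate small-signal gain of a common-emitter stage: -Rc / (re + Re), with re = VT / Ic."""
    r_e_intrinsic = v_t / i_c    # intrinsic emitter resistance, about 25.85 ohms at 1 mA and ~300 K
    return -r_c / (r_e_intrinsic + r_e)

g_plain = ce_gain(10_000, 0, 1e-3)       # no external emitter resistor: gain set by the transistor itself
g_degen = ce_gain(10_000, 1_000, 1e-3)   # 1 kohm unbypassed emitter resistor: degenerated gain
print(f"{g_plain:.1f}, {g_degen:.2f}")
```

With the emitter resistor in place, the gain is set mostly by the resistor ratio rather than by the transistor, which is the linearizing effect of this local feedback.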

There is also a wide variety of feedback topologies. Not all feedback is the same, nor does it serve an identical purpose, and all of it must be executed intelligently. Among the varieties there are positive feedback, negative feedback, thermal feedback, voltage feedback, current feedback, feedforward, global feedback, ERCO, and the list goes on. Every production amplifier uses controlled feedback to improve its linearity and stability. Feedback also greatly reduces the output impedance of the circuit, making it better suited to driving changing loads. High Current is actually a misnomer for high feedback.
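The output-impedance reduction follows the same (1 + loop gain) factor as the other feedback improvements. This Python sketch assumes a hypothetical output stage with 2 ohms of open-loop output impedance and ignores cable resistance and any output choke; damping factor is taken as load impedance divided by output impedance.

```python
def closed_loop_output_impedance(z_open_ohm, loop_gain):
    """Voltage feedback divides the open-loop output impedance by (1 + loop gain)."""
    return z_open_ohm / (1 + loop_gain)

def damping_factor(load_ohm, z_out_ohm):
    """Ratio of load impedance to amplifier output impedance."""
    return load_ohm / z_out_ohm

for lg in (0, 100, 1000):
    z = closed_loop_output_impedance(2.0, lg)
    print(f"loop gain {lg:>4}: Zout {z:.4f} ohm, damping factor into 8 ohm {damping_factor(8.0, z):.0f}")
```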

Another important area of stability in amplifier design brings us to the output stage and the load it is connected to. The amplifier interacts with a non-linear speaker load whose reactive impedance changes with frequency. For such a reactive impedance, the phase angle theta (θ) expresses the time difference between the peak voltage and the peak current. In other words, θ is the phase difference between voltage and current, while the impedance magnitude is the ratio of their amplitudes. The impedance of a speaker cannot be both inductive and capacitive at the exact same frequency; instead, it varies as frequency is altered. At resonance, a driver is resistive; below this point it is inductive, above this point it is capacitive, and eventually it becomes series inductive once again.
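The swing of θ through resonance can be reproduced with a common lumped driver model: voice-coil resistance and inductance in series with a parallel R-L-C network representing the moving system. All component values in this Python sketch are hypothetical, chosen to place resonance at 50Hz; positive θ means inductive, negative means capacitive.

```python
import cmath
import math

# Hypothetical driver model: voice-coil Re and Le in series with a parallel
# R-L-C network (RES, LCES, CMES) standing in for the moving system.
RE, LE = 6.0, 0.5e-3
RES, LCES = 30.0, 20e-3
FS = 50.0                                      # chosen free-air resonance, Hz
CMES = 1 / ((2 * math.pi * FS) ** 2 * LCES)    # capacitance that resonates with LCES at FS

def driver_impedance(f_hz):
    """Complex impedance of the lumped model at f_hz."""
    w = 2 * math.pi * f_hz
    y_motional = 1 / RES + 1 / (1j * w * LCES) + 1j * w * CMES
    return RE + 1j * w * LE + 1 / y_motional

for f in (20, 50, 150, 20_000):
    z = driver_impedance(f)
    theta = math.degrees(cmath.phase(z))       # positive = inductive, negative = capacitive
    print(f"{f:>6} Hz: |Z| = {abs(z):6.2f} ohm, theta = {theta:+6.1f} deg")
```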

The more reactive a load is, the greater the misalignment between voltage and current. An amplifier will have a degree of sensitivity to the phase of the load it is driving, and as the phase approaches full reversal, it will begin to ring. Along with thermal stress, a high degree of phase shift is the most challenging load faced by an audio amplifier. It can result in heavy waveform deformation and even lead to an amplifier’s failure. Despite marketing claims to the contrary, regardless of how powerful an amplifier is into any impedance, its stability remains independent of that power. The only connection is that high feedback promotes a low output impedance, while the stability of that feedback loop is a separate matter.

One final point to mention is that the power amplifier is connected to the loudspeakers via a long cable. Therefore, any parasitic radio frequency interference picked up by the speaker cable may find its way back into the amplifier. External causes of instability originate from the interception of a frequency corresponding to an existing pole, or from symmetrically phased electromotive forces. An amplifier that does not naturally attenuate radio frequency interference on its own requires a low-pass filter, or the isolation of critical components, to prevent radio frequency interception. Radio noise commonly finds its way into high-speed amplifiers, either on the input leads or on the loudspeaker cabling. From there, it progresses onto the power rails and may form a loop. Several forms of isolation, such as shunting with capacitance, can prevent transients and other undesirable signals from being intercepted by a gain stage. In larger power amplifiers, a small-value output choke is often mandatory, whereas in smaller power amplifiers or voltage gain stages, it may be omitted in some designs without repercussions.
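A simple RC input low-pass filter illustrates the trade-off: its corner is placed far enough above the audio band to leave 20kHz essentially untouched while beginning to attenuate radio frequencies. This Python sketch assumes a hypothetical 1 kohm series resistor and 470pF shunt capacitor and ignores source and load impedances.

```python
import math

def rc_corner_hz(r_ohm, c_farad):
    """Corner frequency of a first-order RC filter: 1 / (2*pi*R*C)."""
    return 1 / (2 * math.pi * r_ohm * c_farad)

def rc_lowpass_db(f_hz, fc_hz):
    """Magnitude of a first-order RC low-pass at f_hz, in dB."""
    return -10 * math.log10(1 + (f_hz / fc_hz) ** 2)

fc = rc_corner_hz(1_000, 470e-12)
print(f"corner {fc / 1e3:.0f} kHz")
print(f"20 kHz: {rc_lowpass_db(20e3, fc):.3f} dB, 1 MHz: {rc_lowpass_db(1e6, fc):.1f} dB")
```

A single pole this close to the interfering band only provides roughly 10 dB of attenuation at 1MHz, which is one reason isolation and shunting measures are used alongside it.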

In closing, the phase margin is rarely published for consumer audio products. It is sometimes believed that amplifiers oscillate when they clip, but this is not the case.

Frequency Response Function

Often abbreviated as F.R, Frequency Response is a quantitative measure of how precisely an electronic device under test maintains a constant output signal amplitude over a bandwidth, while the input reference signal remains unchanged. Among scientific bodies, Frequency Response Function is also often referred to by the name Transfer Function. Deviations from a flat frequency response occur in the linear distortion domain, and gain flatness indicates the variations in the device’s static gain behavior over a stated frequency range.

When a recording is produced, it is assumed that it will be played back on a linear system, one capable of reproducing the same energy at all frequencies across the range of our own auditory system. A flat frequency response is indicative of overall quality and of the ability to respond to the upper and lower harmonics of signals all the way to the extremes of the audio spectrum. In order to reproduce music with a degree of faithfulness, it remains important that an audio system neither accentuate nor attenuate any part of the frequency response in the audio range. However, there is nothing wrong with a listener choosing to tailor the response to their preference.

Because extreme stability is necessary for some types of sound applications, some manufacturers have made provisions for early filters to restrict the frequency response, or have accepted relatively high distortion in return for increased amplifier stability. This is because electronic devices have a specified bandwidth in which they offer their peak performance and safety, and the human auditory spectrum happens to extend over a region that bridges both low- and high-frequency devices. However, the latter half of the 1980s saw the introduction of the next generation of semiconductors, which not only replaced all their forebears but have remained the basis for essentially all modern devices used in audio, many of which are still in production.

What has changed since that time is that newer iterations of obsolete devices have become more efficient and offer lower noise. Among a variety of parameters, they offer lower input capacitance and higher safe operating points. As a result, they are more flexible in use, can handle greater thermal and operational stress, and are easier to drive with less demand upon the supporting components. Even so, it has been fully possible to obtain excellent frequency response for several decades. The frequency response of an audio component will, however, be limited by the designer. All finished amplifiers that employ devices in a closed-loop manner will feature a low-pass filter, be it passive or active. This improves the safety of the amplifier and prevents it from self-destructing or acting as a radio frequency transceiver, ie: a radio receiver and broadcast transmitter.

Where an audio reproduction amplifier has no active means to suppress such signals, it would be increasingly dangerous to allow it to operate in a manner that permits a high-frequency signal corresponding to its upper poles to make its way onto the input. Equally concerning would be a lower-frequency radio transmission (for example, 200kHz to 1MHz) arriving on the amplifier’s input interconnects or internal traces, then being amplified out of phase and having that voltage on the output superimposed back onto the input. The lower frequency limit of an audio component may also be restricted to attenuate power-robbing subsonic frequencies, or to keep direct current transients from damaging the loudspeaker system. An experienced designer with a background in signal processing will be able to keep the frequency response flat, typically within a couple of decibels from 20Hz to 20kHz and beyond.

In most cases, upper frequency extension to 100kHz is common, and this is more than adequate for music and theatrical audio reproduction. This frequency is more than two octaves beyond the limits of the perceivable audio spectrum, which are set by the mass of the tympanic membrane, the hammer and anvil, their shape, and the genetically determined operation of the cochlea. It is also far beyond the content of available analog and digital recordings, given mechanical and electrical limits and the Nyquist theorem: the maximum representable frequency is one half of the digital sampling rate, and because aliasing becomes prominent as that limit is approached, integral anti-aliasing filters are necessary.
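The Nyquist limit and the folding behavior behind aliasing can be sketched in a few lines. This Python example assumes ideal sampling with no anti-aliasing filter; `nyquist_hz` and `alias_hz` are illustrative helpers, and 44.1kHz is the compact disc sampling rate.

```python
def nyquist_hz(fs_hz):
    """Highest frequency representable at sampling rate fs_hz."""
    return fs_hz / 2

def alias_hz(f_hz, fs_hz):
    """Frequency at which a tone f_hz appears after ideal sampling at fs_hz with no anti-aliasing filter."""
    folded = f_hz % fs_hz
    return min(folded, fs_hz - folded)

print(nyquist_hz(44_100))          # highest representable frequency for CD audio
print(alias_hz(30_000, 44_100))    # an unfiltered 30 kHz tone folds down into the audio band
```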

The real frequency response will vary from the asymptotic response as a direct result of the small-signal capacitor’s (where applicable) inherently high equivalent series resistance. The compact, cost-saving surface mount capacitors in modern gear may have a stated capacitance of as much as 10µF, which, depending on the impedance of the following circuit, may be more than sufficient. It is important to note, however, that under certain thermal conditions their effective value can fall to as little as one-tenth of the original measured value, and in some applications this variation may not be consistent, or even monotonic. While it is difficult to justify capacitors that cost thousands of dollars, there is an argument for poly film types: they are reliable, display lower distortion, and have better consistency with thermal rise. The flip side is that larger-value capacitors require a greater quantity of energy to sustain the charge-discharge cycles of an alternating current signal, such as an audio signal.

With proper filter points chosen and phase distortion greatly reduced, a new problem presents itself. We can tell that the output, or a preceding stage, is AC coupled through a capacitor by the presence of residual DC after the signal has stopped. One might ask, “How could a direct current make it through a DC blocking capacitor?” The short answer is that while the capacitor is charging, it has to pass current, and during that charging cycle the zero-offset point is disrupted. A concern unique to AC-coupled monolithic systems stems from these charging cycles, since the crucial center reference point should sit at zero Volts. An AC-coupled stage can introduce headroom issues as the low-frequency bandwidth is increased, because the waveform shifts away from zero offset and one half of it may exceed the clipping threshold with dynamic signals at these low frequencies.
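The lingering offset follows an exponential RC decay, so a coupling network with a lower corner frequency (a longer time constant) holds its disturbed charge for longer. This Python sketch assumes a hypothetical 10µF capacitor feeding a 10 kohm load; the helper names are illustrative.

```python
import math

def coupling_time_constant(c_farad, r_ohm):
    """Time constant of a coupling capacitor into a resistive load: R * C."""
    return r_ohm * c_farad

def settle_time(tau_s, residual_fraction):
    """Time for an exponential RC transient to decay to the given fraction of its initial value."""
    return -tau_s * math.log(residual_fraction)

tau = coupling_time_constant(10e-6, 10_000)
print(f"tau = {tau:.2f} s, corner = {1 / (2 * math.pi * tau):.2f} Hz")
print(f"offset decays to 1 % after {settle_time(tau, 0.01):.2f} s")
```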

A skilled engineer can infer a great deal of pertinent information about the design of a component from these criteria, including nodes and modes, phase margin, and stability, and much of it can also be derived from the slope types. Certain behaviors are indicative of the topology, and they can also serve as indicators of other aspects of reproduction fidelity. A response that begins to roll off within the audio bandwidth is a sign that there will be phase shift at those frequencies, while a slope that is shaped or steeper than six decibels per octave indicates a multi-pole filter, usually achieved through successive stages.
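Reading the number of poles from a measured slope works because each pole adds about 6.02dB per octave of ultimate rolloff. This Python sketch assumes a Butterworth alignment at a hypothetical 50kHz corner purely to generate test data; other alignments share the same ultimate slope, though they differ near the corner.

```python
import math

def butterworth_db(f_hz, fc_hz, order):
    """Magnitude of an nth-order Butterworth low-pass, in dB."""
    return -10 * math.log10(1 + (f_hz / fc_hz) ** (2 * order))

def estimate_order(db_at_f, db_at_2f):
    """Estimate the pole count from the level drop over one octave (about 6.02 dB per pole)."""
    slope = db_at_2f - db_at_f
    return round(-slope / (20 * math.log10(2)))

fc = 50e3  # hypothetical corner frequency
for n in (1, 2, 3, 4):
    # "Measure" one octave well above the corner, where the asymptotic slope applies.
    print(n, estimate_order(butterworth_db(10 * fc, fc, n), butterworth_db(20 * fc, fc, n)))
```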

Power Bandwidth

A device’s power bandwidth is the frequency range it covers at a stated power level, usually given in Watts or Volts depending on the application. A frequency response measurement is captured at a relatively low power output of about one Watt, known as the small-signal bandwidth. The Power Bandwidth of a product contrasts with this small-signal analysis: it is measured at half-power or full-power capacity, before the onset of clipping. The Power Bandwidth measurement provides a reliable and accurate means by which to quantify an audio device’s ability to produce high levels of output over a wide frequency range. Its limits are defined by the points where the product can only produce half the power it was capable of at one thousand Hertz, ie: -3 decibels. In a well-conceived design, these will reside far outside the audible region.
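The half-power definition translates directly into decibels and output voltage. This Python sketch assumes a hypothetical amplifier rated at 100W into 8 ohms at 1kHz; the helper names are illustrative.

```python
import math

def power_ratio_db(p_out_w, p_ref_w):
    """Power ratio in decibels: 10 * log10(P / Pref)."""
    return 10 * math.log10(p_out_w / p_ref_w)

def rms_voltage(p_w, r_ohm):
    """RMS voltage that delivers p_w into a resistive load r_ohm."""
    return math.sqrt(p_w * r_ohm)

print(f"{power_ratio_db(50, 100):.2f} dB")   # half power is about -3 dB
print(f"{rms_voltage(100, 8):.2f} V -> {rms_voltage(50, 8):.2f} V RMS at the band edge")
```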


If you have ever encountered a vast reduction in the bass or treble levels of a system as the volume was increased, you may have experienced the audible artifacts stemming from the limits of the Power Bandwidth. Sometimes abbreviated P.B., or G.P.B. for Gain Power Bandwidth, it relates to behavior wherein, as the device’s output level or gain is increased, its frequency response narrows. This is because the bandwidth of a conventional voltage-feedback amplifier is inversely proportional to its gain. As the output level or gain is increased, the bandwidth is subject to reduction, and this can be depicted with what is called a Bode plot.

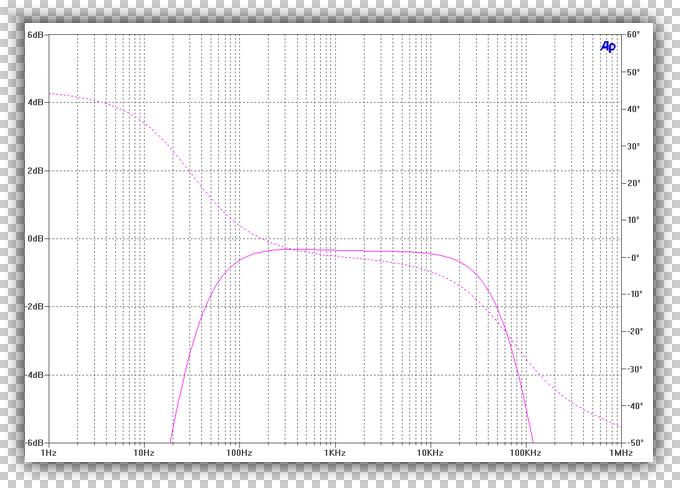

The above Bode plot illustrates the open- and closed-loop gain and Power Bandwidth for three different feedback levels. The highest line intercepting the open-loop rolloff depicts the least applied feedback (40dB), offering eighty decibels of gain but also a narrow full-power bandwidth (Fp) of 1kHz. The next line down shows 60 decibels of feedback, providing 60 decibels of gain and an Fp of 10kHz. Finally, the lowest intercepting line depicts eighty decibels of feedback and forty decibels of gain, offering an Fp of 100kHz. As more feedback is applied, gain is traded for improved linearity and frequency extension. However, if the bandwidth becomes so wide that the loop intercepts the device’s upper pole above unity gain (zero decibels), where the rate of attenuation reaches twelve decibels per octave, compensation will be required for stability.
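The three operating points described for the Bode plot are consistent with a constant gain-bandwidth product: 80dB (x10,000) at 1kHz, 60dB (x1,000) at 10kHz, and 40dB (x100) at 100kHz all equal 10MHz. This Python sketch takes that 10MHz product and the implied 120dB open-loop gain from those numbers and assumes a single dominant pole.

```python
def closed_loop_bandwidth(gbw_hz, closed_loop_gain_db):
    """For a single dominant pole, bandwidth = gain-bandwidth product / closed-loop gain."""
    return gbw_hz / 10 ** (closed_loop_gain_db / 20)

OPEN_LOOP_DB = 120   # implied by the figure description: 80 dB of gain plus 40 dB of feedback
GBW = 10e6           # implied by the figure description: 80 dB (x10,000) times 1 kHz

for gain_db in (80, 60, 40):
    feedback_db = OPEN_LOOP_DB - gain_db
    print(f"{feedback_db} dB feedback -> {gain_db} dB gain, bandwidth {closed_loop_bandwidth(GBW, gain_db):,.0f} Hz")
```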

In the earlier days of solid state, devices displayed a narrower Gain Power Bandwidth. This meant that if a device was used without feedback, or was operated at high levels with low feedback, it would be entering the region where its frequency response was narrowest. As a result, crescendos and dynamic swings could fall short of their proper frequency spectrum and even distort, making the sound compressed and constrained. While some may associate that sound with under-built power supplies, it was rarely induced by them. It was more often the result of employing early low-frequency devices while reducing the number of successive stages, and operating those devices at too-high gain product levels. In other words, it was not collectively the fault of the power supplies. The updated wide-band, high-gain devices of the 1980s made Gain Bandwidth Product issues in audio equipment design an obsolete topic, but caused new troubles for a number of audio companies that were not prepared to take full advantage of the high bandwidth. That was readily evident in their written disclosures, if not overtly apparent in their design choices.

The power bandwidth of a properly engineered amplifier, or other gain stage, is quite linear from 20Hz to 20kHz, from a fraction of a Watt to full power. In some cases, it approaches one Megahertz before a low-pass compensation filter is installed in the feedback loop or elsewhere. The response should remain constant at audio frequencies regardless of power output. Taking an amplifier as an example, wide power bandwidth means that the device can reproduce high-level upper harmonics within a signal at any power level as easily as it reproduces mid-range fundamentals. The end result of a properly designed amplifier with good power bandwidth is that the listener gets full power performance from the product over the entire audio frequency spectrum. This is especially important when the amplifier is called upon to reproduce musical material with high energy over a wide frequency range, where perceived sound quality on dynamic passages may otherwise be compromised.

Gain Factor and Small Signal Gain Linearity

Gain is the relationship between the signal magnitude found at the output of a device and that which exists at its input in the same instant. Audio equipment, including phonograph amplifiers, preamplifiers, and power amplifiers, each has a quantity of gain that can be represented as the ratio of voltage signal multiplication as waveforms pass through the device. This multiplication is the increase in level and is known as amplification. Amplification can also take place in the current domain at vanishingly low voltage potentials; however, very few audio components are designed to operate in this manner. The final value of signal multiplication may be given as a Gain Factor ratio that compares the output to the input, or simply represented as an amplitude in decibels. The latter is more useful to the general public, as it is a scale that most listeners can relate to.
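Converting between a voltage Gain Factor and decibels uses 20·log10 of the voltage ratio. This short Python sketch uses illustrative helper names and values.

```python
import math

def gain_db(v_out, v_in):
    """Voltage gain in decibels: 20 * log10(Vout / Vin)."""
    return 20 * math.log10(v_out / v_in)

def gain_factor(db):
    """Voltage Gain Factor corresponding to a gain in decibels."""
    return 10 ** (db / 20)

print(f"{gain_db(10, 1):.1f} dB")   # a x10 voltage gain is 20 dB
print(f"x{gain_factor(26):.2f}")    # 26 dB is roughly a x20 voltage gain
```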

In the modern age of home audio reproduction circuitry, Gain Factor is usually a fixed value. Power amplifiers often provide twenty-five to thirty decibels of gain, enough to preclude any need for a preliminary stage of amplification and to allow them to be driven to their maximum output power. The output level is adjusted by a potential divider network integrated into an early stage prior to the power stage, and as mentioned, this early stage may not offer any gain. Sources such as compact disc players and phono amplifiers normally operate at one- to two-Volt levels, which are typically greater than what is necessary to drive most power amplifiers into hard clipping. Lower-level sources and quieter recordings may require more gain to achieve the desired playback levels, and these are the primary reason why the vast majority of discrete preamplifiers do offer active circuitry and gain.
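Whether a source can drive a power amplifier to clipping comes down to the amplifier’s input sensitivity: rated output voltage divided by its voltage gain. This Python sketch assumes a hypothetical amplifier rated at 200W into 8 ohms with 29dB of gain; `input_sensitivity` is an illustrative helper.

```python
import math

def input_sensitivity(rated_power_w, load_ohm, gain_db):
    """Input voltage (RMS) that drives the amplifier to its rated output."""
    v_out = math.sqrt(rated_power_w * load_ohm)
    return v_out / 10 ** (gain_db / 20)

s = input_sensitivity(200, 8, 29)
print(f"sensitivity {s:.2f} V RMS; a 2 V source exceeds it: {2.0 > s}")
```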

Throughout the history of consumer audio design, there have been products which operated under conditions that prevented them from providing linear dynamic reproduction. These devices muted, or otherwise compressed, the apparent signal while showing no signs of clipping. The two primary scenarios are the muting of small signals and the compression of dynamic passages. The former causes a loss of the information that comprises the finer, lower-level transients in a recording. The latter is a situation wherein peaks above an unspecified margin are reduced in amplitude. Building upon this second deviation from gain linearity, there may have been instances of small signals being left unchanged, with their proportions maintained perfectly, yet with the dynamic range reduced. This peculiar form of gain linearity distortion made smaller sonic traits appear louder, giving the impression of great detail while maintaining a very limited dynamic range.
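The second scenario, peaks reduced while small signals pass untouched, behaves like a static hard-knee compression curve. This Python sketch assumes a hypothetical threshold of -10dBFS and a 4:1 ratio; it is an illustration of the effect, not a model of any particular product.

```python
def static_compressor_db(level_db, threshold_db, ratio):
    """Hard-knee static compression curve: levels above threshold grow at 1/ratio the rate."""
    if level_db <= threshold_db:
        return level_db
    return threshold_db + (level_db - threshold_db) / ratio

for level in (-40, -20, -10, 0):
    print(level, static_compressor_db(level, -10, 4))
```

Here a 40dB span of input levels (-40 to 0) leaves as only 32.5dB, even though nothing below the threshold changed.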

There are two main types of amplification: current and voltage. Voltage amplification is the most common and remains the simplest. Current amplification is a means of signal transfer which largely sidesteps the problems of stray parallel conductor capacitance in long runs. Provided that the signal path can offer a consistently low resistance, there is little signal degradation. It works by feeding stages with a current signal that resides near zero Volts. If one were to attempt to measure it with a multimeter, the line would likely behave as though no signal were present. For current to flow there has to be a voltage; in the case of current amplification, it is simply so small that it escapes detection by typical low-sensitivity measurement gear, such as multimeters. This very small potential is not unlike that found in digital logic circuitry and digital-to-analog converters, and current flow integrity is maintained through the use of low-resistance circuit networks.

At some point, preferably closer to the final stage, the current signal must be converted into a voltage for it to be useful. This is quite simple and only requires a single stage, such as an operational amplifier with feedback serving as a transimpedance converter. The operational amplifier is the most common means of conversion due to its inherent precision. The line input to the stage is taken at the inverting (negative) input, while the non-inverting (positive) input is tied to a ground plane. Any current flowing into the inverting input has to flow through the feedback resistor, developing a voltage across it as per Ohm’s Law. With feedback applied, an operational amplifier drives its output so as to keep the difference between its two inputs near zero; internally, it is effectively a V-to-I converter that cascades into an I-to-V output stage. The inverting input therefore mirrors the non-inverting input, which means it is effectively grounded as well (a virtual ground). Because the inverting input and the output are connected through the feedback resistor, the output must swing to supply the voltage drop across that resistor. Voilà: the current has been converted into a proportional voltage signal for amplification.
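For an ideal operational amplifier, the conversion reduces to Vout = -Iin × Rf, because the whole input current flows through the feedback resistor while the inverting input sits at virtual ground. This Python sketch assumes a hypothetical current-output source delivering up to 2mA into a stage with a 1 kohm feedback resistor, and ignores op-amp bias currents and offsets.

```python
def transimpedance_output(i_in_amp, r_f_ohm):
    """Ideal inverting I-to-V converter: the inverting input is a virtual ground,
    so all input current flows through Rf and Vout = -Iin * Rf."""
    return -i_in_amp * r_f_ohm

for i in (0.5e-3, 1e-3, 2e-3):
    print(f"{i * 1e3:.1f} mA -> {transimpedance_output(i, 1_000):+.2f} V")
```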

This can also be accomplished with a vacuum tube, but it is important to maintain a high interstage or source impedance. Where most audio components strive for low source impedance, a tube (such as a triode in an I-to-V stage) requires a high source impedance to permit enough freedom for the grid to move. Designing a high-impedance source has never been a difficult task: simply increasing the value of the unbypassed cathode resistor in the driving grounded-cathode triode stage will raise its output impedance considerably.
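The effect of the cathode resistor follows the standard small-signal result for a grounded-cathode triode: an unbypassed cathode resistor Rk raises the plate-side resistance to ra + (µ + 1)Rk, which then appears in parallel with the plate load. This Python sketch assumes hypothetical high-mu triode values (ra = 62.5 kohm, µ = 100) and a 100 kohm plate load; in this simple model the plate load sets an upper bound on the result.

```python
def parallel(a, b):
    """Two resistances in parallel."""
    return a * b / (a + b)

def triode_output_impedance(ra, mu, rk, r_load):
    """Plate-side output impedance of a grounded-cathode triode with an unbypassed
    cathode resistor: (ra + (mu + 1) * Rk) in parallel with the plate load."""
    return parallel(ra + (mu + 1) * rk, r_load)

for rk in (0, 1_500, 10_000):
    z = triode_output_impedance(62_500, 100, rk, 100_000)
    print(f"Rk = {rk:>6} ohm -> Zout = {z / 1e3:.1f} kohm")
```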

“Not all of the various types of distortion exhibited by electronics can be demonstrated by sinusoidal test tones, nor under steady operating conditions alone. Over years of audio component engineering and research, this has been one of many great lessons. An interesting portion of this casual article may be the dynamic classifications of distortion, as they demonstrate that static measurements do not always reflect the complete dynamic behavior we encounter in music reproduction.

In other words, the tests we see online and in brochures use steady-state sinusoidal tones, and they capture only the behavior seen while a signal continues unchanged. Musical signals, however, start, stop, and otherwise change. No two arrangements behave in an identical manner under all conditions.

All audio waveforms are composed of carrier waves and additive and subtractive components, and the alteration of their shape, proportion, and frequency forms the final dynamic audio product. Dynamic measurements better reflect the way electronic products reproduce audio waveforms. In light of this, it is interesting that even in this day and age, some still assume that two or three static tests describe a device in its entirety. Continuing this paper, we will now enter the topics of more intricate forms of Dynamic Distortion. While several of these distortions are most pertinent in the context of physical media, each also holds credence in electronics engineering.”

Amplitude-Frequency and Phase-Frequency Distortion

Some types of distortion act differently depending on whether the fundamental frequencies are continuous, limited to a single cycle, or even a portion of one. As such, distortion analysis based on test tones overlooks a great deal of the behavior exhibited by the device, leaving much of its performance and sound a mystery to the uninformed. None of the following is represented in a typical F.F.T. of a tone test. The following is a small compilation of test results and the classification of each.

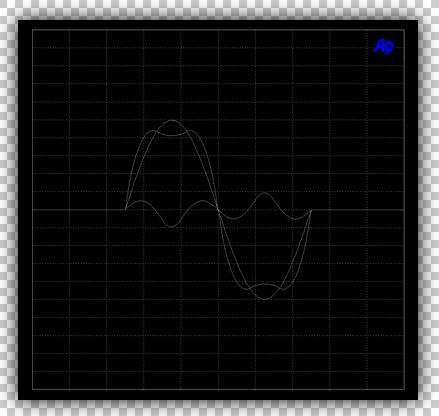

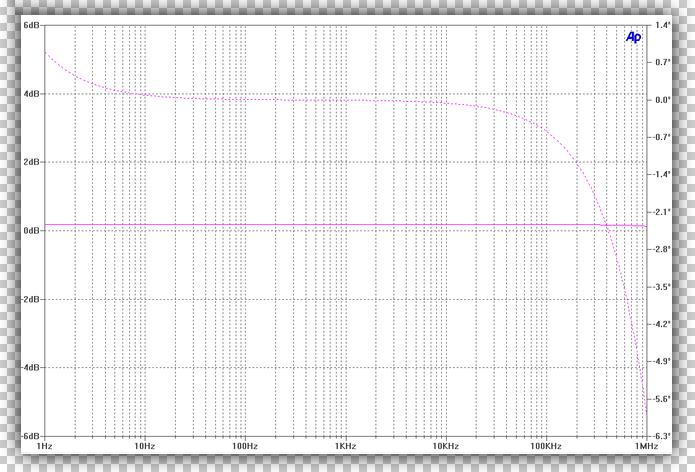

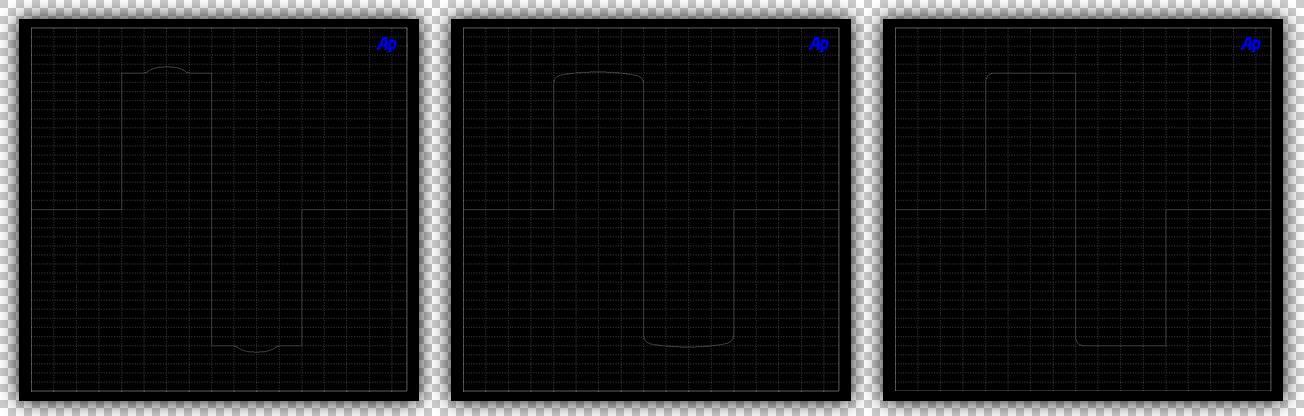

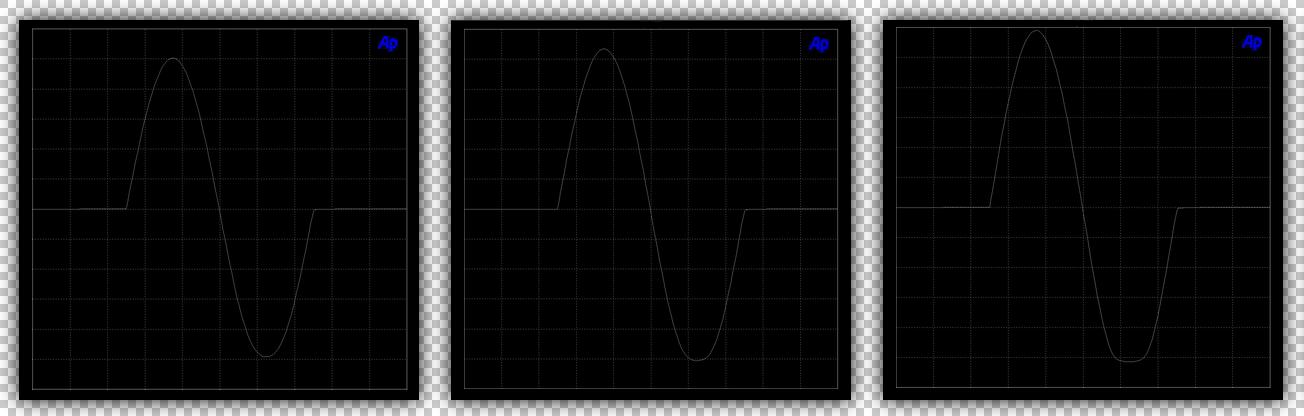

The Figures above display Amplitude-Frequency Distortion, Phase-Frequency Distortion, and the cumulative results of phase shift upon a tone spanning several cycles. An example of Amplitude-Frequency Distortion is present in the first image. This type of distortion results from a change that typically involves a half waveform, or an otherwise sinuous transient, whose amplitude is increased or decreased while its time span is also altered, changing its frequency. This is a complex distortion, and it changes the original transient content to such an extent that it no longer resembles the original signal.

Much like Amplitude-Frequency Distortion, Phase-Frequency Distortion arises when a circuit imparts a difference in apparent phase, resulting in a modification of the impulse’s time span. After arriving at the far end of an audio circuit, the currents require time to build to their intended value. However, if conditions dictate that the time envelope needed is far too great, they may never reach their previous levels. This results in the attenuation and distortion of the signal’s initial and terminating pulses. It is possible for a device or circuit to exhibit the traits of Amplitude-Frequency Distortion and Phase-Frequency Distortion simultaneously.

In the third Figure, we can see an example of what phase shift can do to a complete sinusoidal waveform. The initial point of 90 degrees fails to reach its intended peak magnitude, and where the out-of-phase signal ends without completing its full cycle, it ceases with an abrupt transient spike. While this can happen anywhere over a device’s bandwidth, this behavior is most often present at low frequencies. Anywhere there is a low- or high-pass filter, there will be some phase shift.

In many commercial and DIY designs where undersized coupling capacitors are chosen to lower build costs, or simply because they are deemed adequate, the results resemble those displayed in the Figures above. Bypassing the small film signal capacitors with 10µF, 27µF, or even 100µF electrolytic types goes a long way toward reducing this aspect of phase distortion. Have no fear of the electrolytic polluting the signal or otherwise undermining the purpose of the smaller, higher-quality primary film capacitor, because the equivalent series resistance and inductance of modern films are far lower than those of electrolytics. The electrolytic is therefore the more resistive path, and it is only active where the film capacitor would otherwise be ineffective: at low frequencies.
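The benefit of the electrolytic bypass shows up in the coupling network’s corner frequency and in the phase lead it leaves at 20Hz, since capacitances in parallel simply add. This Python sketch assumes ideal capacitors (no ESR, ESL, or thermal drift) and a hypothetical 10 kohm input resistance; the helper names are illustrative.

```python
import math

def highpass_corner_hz(c_farad, r_ohm):
    """Corner frequency of a first-order RC high-pass: 1 / (2*pi*R*C)."""
    return 1 / (2 * math.pi * r_ohm * c_farad)

def highpass_phase_deg(f_hz, fc_hz):
    """Phase lead of a first-order RC high-pass at f_hz: atan(fc / f)."""
    return math.degrees(math.atan(fc_hz / f_hz))

R_LOAD = 10_000  # hypothetical input resistance seen by the coupling capacitor
for label, c in (("1 uF film", 1e-6), ("1 uF film + 100 uF electrolytic", 101e-6)):
    fc = highpass_corner_hz(c, R_LOAD)   # parallel capacitances add
    print(f"{label}: corner {fc:.2f} Hz, phase shift at 20 Hz {highpass_phase_deg(20, fc):.1f} deg")
```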

Amplitude Modulation Distortion

Amplitude Modulation is a technique used in signal broadcasting and in pulse-width-modulated means of amplification. There are three primary types of amplitude modulation distortion, each involving a carrier signal superimposed upon the fundamental signal. The carrier may maintain a constant dominant tone and vary in amplitude in proportion to the fundamental signal; it may operate at a constant dominant magnitude and vary in frequency with the input signal; or, finally, it may track the input tone, following it as a small-signal anomaly.

The first Figure above demonstrates an example of Amplitude Modulation Distortion acting on a square-wave primary tone, as previously mentioned in the section pertaining to harmonic distortion. The second Figure demonstrates a third-order harmonic that modulates in amplitude at the same frequency as the fundamental sinusoidal waveform. Because audio is ever-changing, the effects will be more pronounced with some music and media than with others. Add to this a change in the phase of different harmonics, and the cumulative effect can be dynamic.

Any time that amplitude modulation is not part of the primary design goal, it should be treated as a parasitic effect, its source located, and the cause rectified. Amplitude modulation can apply to any order of harmonic distortion, where the harmonic contains an amplitude-modulated sample of the fundamental frequency. In this case, the rise and fall of the waveform remain close to the original, but the crest is suppressed by the out-of-phase modulation.
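A third harmonic whose amplitude is modulated at the fundamental rate can be reproduced numerically. The Python sketch below builds one cycle of such a waveform and runs a plain discrete Fourier transform over it; the harmonic level h, modulation depth m, and helper names are hypothetical. Because sin(a)·sin(3a) = [cos(2a) − cos(4a)]/2, the modulation spreads energy from the third harmonic into new components at the second and fourth harmonics, each with amplitude h·m/2.

```python
import cmath
import math

def dft_amplitudes(samples):
    """Plain DFT; returns peak amplitudes for bins 1 .. N/2 - 1 (the DC bin is skipped)."""
    n = len(samples)
    amps = []
    for k in range(1, n // 2):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(samples))
        amps.append(2 * abs(acc) / n)
    return amps

def modulated_third_harmonic(n=64, h=0.1, m=0.5):
    """One fundamental cycle of sin(a) + h * (1 + m*sin(a)) * sin(3a)."""
    out = []
    for i in range(n):
        a = 2 * math.pi * i / n
        out.append(math.sin(a) + h * (1 + m * math.sin(a)) * math.sin(3 * a))
    return out

amps = dft_amplitudes(modulated_third_harmonic())
for harmonic in range(1, 6):
    print(f"harmonic {harmonic}: {amps[harmonic - 1]:.4f}")
```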

Transient Response

Transient Response encompasses important aspects of audio reproduction and design. The term is broad, but it underlines the importance of transient testing a device under test to help quantify its fidelity in recreating stochastic, multi-state signal trajectories in combination with other signals. There are a number of tests for Transient Response Distortions, encompassing large- and small-signal tests, multiple-tone signals, and a variety of methods that demonstrate how the subject behaves under dynamic conditions. Transient analysis differs from other tests because it employs specialized methods and a variety of stimuli that may not recur, whereas harmonic and intermodulation distortion measurements center on steady-state, ongoing sinusoidal waveforms.

The primary reason for Transient tests is that all sounds and musical signals have both a beginning and an end, sometimes abrupt. These signals change constantly in amplitude, frequency content, beats, modulation, and the constructive and destructive interference of such contributions. In other words, music and natural sounds contain distinct transient events that are both brief and quite asymmetric in shape. There are a number of different tests for transient response, and each is based on recording what happens when a device is asked to rise or drop from zero potential to a new magnitude, possibly hold that level for a period, and then revert to zero potential. By comparing the results with known performance criteria, a competent and experienced engineer can gain great insight into the circuit topology, the mode of operation, the nature of the filters, and other important behavioral characteristics of a device.

The secondary reason for testing Transient Response is that many stages of signal processing and amplification differ, slightly to vastly, in their ability to handle impulses, square waveforms, and triangular waveforms. Very few soundtracks contain perfect square waveforms, and the sound of such waveforms is of little concern; what interests the prudent designer is less the reproduction of the square wave itself than exactly what is added at the onset of those impulses or waveforms. Any additional tones or pulses that this stimulus provokes are of great interest and help the engineer to build a better-sounding, safer device.

Impulse Response Function

Impulses are short-duration transients, meaning that they are brief events. There are a number of techniques for the analysis of impulses, and for this purpose amplifiers are generally treated as discrete-time systems excited by isolated impulses rather than by a continuous train of them. The electrical transient comes from a precisely calibrated source, such as a laboratory function generator and comparator. The intent is to create a fast-rising pulse that squares off sharply at its peak, then falls equally fast to zero potential. The value of this test lies in observing how a device under test behaves immediately before and after the impulse.

Impulse Response Functions, abbreviated as I.R.F, are common in dynamic power testing of amplifiers, but they are also a good tool for identifying problems with small-signal reproduction that could lead to a perceived loss of sonic information. The Impulse Response Function of a device can be derived by comparing the resulting output to the input impulse, which is idealized mathematically by simple expressions such as the Kronecker delta function. Impulse test results are also useful in quantifying a device’s isolation and immunity to precedence effects, its phase response, its slew rate, and its tendency toward ringing, which may be the harbinger of a later problem.
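In discrete time the idea reduces to a few lines: feed the Kronecker delta (1 at n = 0, 0 elsewhere) through a system, record the output as the impulse response h[n], and, for a linear time-invariant system, convolving any input with h[n] reproduces the system's output for that input. The second-order difference equation below is a hypothetical underdamped stand-in for a device under test, not a model of any real amplifier; its decaying oscillation is the kind of ringing the text refers to.

```python
def device(x):
    """Hypothetical LTI device: y[n] = x[n] + 1.6*y[n-1] - 0.8*y[n-2].
    Its poles sit at 0.8 +/- 0.4j (radius ~0.89): stable but underdamped."""
    y = []
    for n, xn in enumerate(x):
        y1 = y[n - 1] if n >= 1 else 0.0
        y2 = y[n - 2] if n >= 2 else 0.0
        y.append(xn + 1.6 * y1 - 0.8 * y2)
    return y

def kronecker_delta(length):
    """Discrete unit impulse: 1 at n = 0, 0 elsewhere."""
    return [1.0 if n == 0 else 0.0 for n in range(length)]

def convolve(x, h):
    """Causal convolution y[n] = sum_k h[k] * x[n-k], truncated to len(x) samples."""
    return [sum(h[k] * x[n - k] for k in range(min(n + 1, len(h))))
            for n in range(len(x))]

N_SAMPLES = 40
h = device(kronecker_delta(N_SAMPLES))               # the impulse response function
x = [0.0, 1.0, 0.5, -0.25] + [0.0] * (N_SAMPLES - 4)  # arbitrary short test input
direct = device(x)                                    # run the input through the device
via_h = convolve(x, h)                                # predict the output from h[n] alone

sign_changes = sum(1 for a, b in zip(h, h[1:]) if a * b < 0)
print("h[0..5]:", [round(v, 3) for v in h[:6]])
print("max |direct - predicted|:", max(abs(a - b) for a, b in zip(direct, via_h)))
print("sign changes in h[n] (ringing):", sign_changes)
```

The predicted and directly computed outputs agree to rounding error, which is why a single clean impulse measurement can characterize a linear device.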

Overshoot and undershoot provide data on stability, and amplitude deviations such as rounding of the impulse reveal information about phase error. Because the impulse is so brief, the results can help identify aberrations that may be missed with other transient signals spanning a longer time. Dynamic transient spikes occurring during an impulse test can stem from the physical placement of components on a printed circuit board, or from point-to-point wiring. One of the more prominent offenders is trace and conductor inductance, and this parasitic inductance can be mitigated through careful layout and circuit routing.
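The link between overshoot and stability is easiest to see in the textbook second-order model, where the peak overshoot of the step response depends only on the damping ratio ζ: percent overshoot = 100·exp(−πζ/√(1 − ζ²)) for ζ < 1. Treating an amplifier as a pure second-order system is an assumption made for illustration; real devices have more poles. The inverse relation lets a measured overshoot be turned into an estimate of ζ.

```python
import math

def percent_overshoot(zeta: float) -> float:
    """Peak step-response overshoot (%) of an ideal second-order system."""
    if zeta < 0.0:
        raise ValueError("negative damping: the system is unstable")
    if zeta >= 1.0:
        return 0.0  # critically damped or overdamped: no overshoot
    return 100.0 * math.exp(-math.pi * zeta / math.sqrt(1.0 - zeta ** 2))

def damping_from_overshoot(percent: float) -> float:
    """Invert the relation: estimate zeta from a measured overshoot (0 < percent < 100)."""
    ln_os = math.log(percent / 100.0)
    return -ln_os / math.sqrt(math.pi ** 2 + ln_os ** 2)

for zeta in (0.1, 0.3, 0.5, 0.707, 0.9):
    print(f"zeta = {zeta:5.3f}: overshoot = {percent_overshoot(zeta):5.1f} %")
```

In this model a lightly damped stage (ζ = 0.1) overshoots by roughly 73%, while ζ ≈ 0.7 keeps overshoot near 4%; a measured overshoot of about 16% corresponds to ζ ≈ 0.5.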

Rise Time Distortion

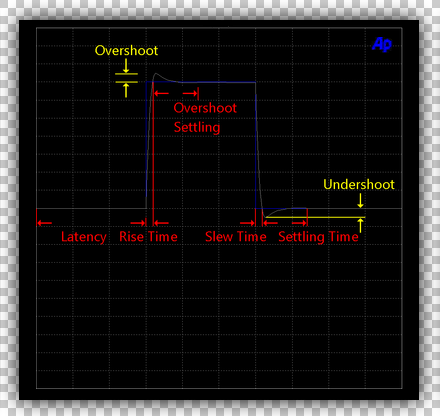

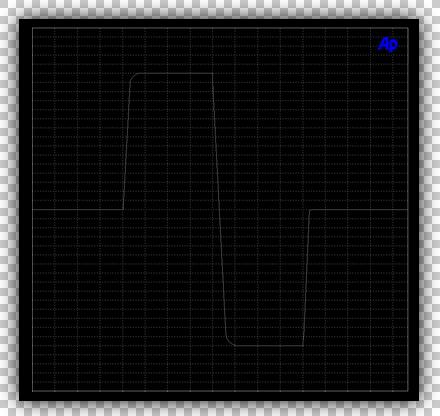

Rise time is a measure of how long a device under test takes to respond to a square wave that alternates at a specified frequency and a predetermined level. The rise time of an amplifier is an indication of its frequency response and reveals what kinds of filters are in use. A fast rise time corresponds to a wide frequency response, and a rise time that is rounded or tilted over indicates that a portion of the circuitry acts as a low-pass filter.

For audio equipment, Rise Time Distortion is referenced to a 1kHz square-wave signal of one volt peak-to-peak amplitude at an amplifier’s output. It is determined by the time required for the output to change from 10% to 90% of its final value, ie: from 0.1 Volts to 0.9 Volts. The first and last 0.1 Volts are excluded to improve the accuracy of this single test; otherwise, any non-linearities and secondary emissions present in the device or the source signal could lead to erroneous measurements. Rise Time Distortion is related to Slew-Induced Distortion, the fundamental differences being the input reference levels and the fact that Rise Time Distortion ignores the first and last 10% of the waveform. A general example of this is depicted in the first image of the Transient Distortion section, above.
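For the simplest case, a single-pole low-pass response, the 10% to 90% rise time follows directly from the time constant τ = 1/(2πf) where f is the -3dB bandwidth: the step output is 1 − e^(−t/τ), so the rise time is τ·ln 9 ≈ 2.2τ, or about 0.35 divided by the bandwidth. The sketch below checks that relation by stepping the filter numerically; the 100kHz bandwidth is an assumed value, and a real amplifier with more than one pole will deviate from it.

```python
import math

F3DB = 100e3                        # assumed -3 dB bandwidth, Hz
TAU = 1.0 / (2 * math.pi * F3DB)    # single-pole time constant, s
DT = TAU / 1000                     # simulation time step, s

def rise_time_10_90(tau: float, dt: float) -> float:
    """Step a one-pole low-pass with a unit input; return the 10%-90% rise time."""
    y, t = 0.0, 0.0
    t10 = None
    while y < 0.9:
        y += (1.0 - y) * dt / tau   # forward-Euler step of dy/dt = (1 - y) / tau
        t += dt
        if t10 is None and y >= 0.1:
            t10 = t
    return t - t10

simulated = rise_time_10_90(TAU, DT)
analytic = TAU * math.log(9)
print(f"simulated 10-90% rise time: {simulated * 1e6:.3f} us")
print(f"analytic tau * ln(9):       {analytic * 1e6:.3f} us")
print(f"rule of thumb 0.35 / f3dB:  {0.35 / F3DB * 1e6:.3f} us")
```

All three figures land near 3.5 microseconds for a 100kHz single-pole bandwidth, which is why a measured rise time can be read as a rough bandwidth estimate.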

Unit Step Function Response

A Unit Step Function Response test closely resembles the transient-response square-pulse tests, but differs from them in one important way: the signal does not immediately fall back to zero potential. Instead, it is a fast-rising direct current voltage that climbs and holds its value for an extended period. This test is useful in assessing stability and how a device under test behaves when the signal becomes a direct current. It also helps assess the safety of the device, since a direct current could appear if an amplifier were connected to a preamplifier that developed a sudden fault. A properly designed amplifier will not reproduce a direct current signal and will instead reject it. If it did reproduce the DC signal, it would not only put itself at risk but could also damage the loudspeakers and present a fire or shock hazard.
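One common way an amplifier rejects a DC step is an input coupling capacitor, which together with the input resistance forms a first-order high-pass filter. Its response to a unit step is an initial jump that then decays as e^(−t/RC), so a sustained DC input is not passed on. The sketch simulates that decay with a one-pole discrete high-pass; the 22kΩ and 2.2µF values and the sample rate are hypothetical assumptions.

```python
import math

R = 22e3          # hypothetical input resistance, ohms
C = 2.2e-6        # hypothetical coupling capacitance, farads
TAU = R * C       # high-pass time constant (~48 ms)
FS = 48_000       # simulation sample rate, Hz

# One-pole high-pass, discretised as y[n] = a * (y[n-1] + x[n] - x[n-1])
# with a = tau / (tau + 1/fs).
a = TAU / (TAU + 1.0 / FS)

def highpass_step_response(seconds: float):
    """Output of the high-pass when a unit DC step is applied and held."""
    y_prev, x_prev = 0.0, 0.0
    out = []
    for _ in range(int(seconds * FS)):
        x = 1.0                      # unit step: DC held indefinitely
        y = a * (y_prev + x - x_prev)
        out.append(y)
        y_prev, x_prev = y, x
    return out

resp = highpass_step_response(1.0)
for t_ms in (0, 48, 240, 999):
    print(f"t = {t_ms:4d} ms: output = {resp[t_ms * FS // 1000]:.4f}")
```

The output jumps to nearly the full step and then falls to about 37% after one time constant and to essentially zero within a second, so the held DC level never reaches the following stages.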

Slew Rate and Slew-Induced Distortion

A device’s ability to follow a fast-rising or fast-falling waveform defines its Slew Rate, and this measurement is taken at higher levels than the rise time distortion measurements. Abbreviated as S.R, Slew Rate is tested using single square-wave tones, and Slew-Induced Distortion is identified as a tilting of the rising and falling edges. Slew-Induced Distortion, abbreviated as S.I.D, is the forerunner of transitory intermodulation distortion when multiple tones are applied, since the upper-level harmonics then deviate from their original alignment with the transient carrier. It is also responsible for insufficient high-frequency performance.

The section on harmonic distortion showed that higher-order harmonics act to flatten the crests of sinusoidal waveforms. While the auditory system does not permit a human to hear frequencies much beyond 20kHz, it may be able to identify rise times associated with initial harmonics slightly above this point. These fast-rising square waveforms are tied to the human response system of fear and shock. They are therefore not germane to musical spectra, and the only commercial playback medium that may contain such information, and that has a high enough filter to permit its reproduction, would be 24/192kHz digital. Analog is also capable of capturing and reproducing high frequencies, but one would be hard pressed to find any material on the shelf extending to the frequencies and dynamic levels required to truly test an amplifier’s slew rate. In recordings, high frequencies are the lowest-power constituents and rarely reach high levels compared to the mid-range and low frequencies. Live performances are also often performed through a filter that limits their Slew Rates.

A Slew Rate permitting a bandwidth into the high megahertz is not required to build an amplifier of reference sound quality. What it does require is the careful application of design principles to ensure that Slew-Induced Distortion resulting from bandwidth limiting is minimized at all levels. One full cycle (three-hundred and sixty degrees) of a 20kHz waveform spans fifty microseconds. The rise and slew times each span one-quarter of that cycle, or twelve-and-a-half microseconds. It is typically good practice to design amplification stages so that the highest audible frequency being targeted is approximately one-fifth of the amplifier’s half-power point. For a 20kHz goal, bandwidth attenuation would begin at 100kHz, ie: -3 decibels at 100kHz. This places all of the filtering outside the audio range and allows the amplifier to carry all of the important information with minimal Slew-Induced Distortion.
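The timing arithmetic in this paragraph can be checked directly, along with what the one-fifth rule buys. Assuming, for illustration, that the bandwidth limit is a single first-order pole at 100kHz, its effect at 20kHz is an attenuation of 1/√(1 + (f/f₀)²) and a phase lag of arctan(f/f₀), where f₀ is the half-power point.

```python
import math

F_TARGET = 20e3                 # highest audio frequency targeted, Hz
F_3DB = 5 * F_TARGET            # one-fifth rule -> half-power point at 100 kHz

period_us = 1e6 / F_TARGET      # one full 360-degree cycle, microseconds
quarter_us = period_us / 4      # one quarter of the cycle, microseconds

# Single first-order pole: an illustrative assumption, not a specific design.
ratio = F_TARGET / F_3DB
gain_db = -10 * math.log10(1 + ratio ** 2)
phase_deg = -math.degrees(math.atan(ratio))

print(f"period at 20 kHz: {period_us:.1f} us, quarter cycle: {quarter_us:.1f} us")
print(f"-3 dB point: {F_3DB / 1e3:.0f} kHz")
print(f"response at 20 kHz: {gain_db:.2f} dB, phase {phase_deg:.1f} deg")
```

Under that assumption the 20kHz response is down by less than 0.2 decibels, though a phase lag of about 11 degrees remains.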

To reproduce 20kHz accurately at five Watts into an eight-Ohm load (about 8.9 Volts peak), a slew rate of about 1.1 Volts/microsecond is needed, and at two-hundred Watts (about 56.6 Volts peak) the required slew rate is about 7.1 Volts/microsecond. Many amplifiers are capable of 45 Volts/microsecond and higher, and although such slew rates are needed for maximum power, the upper frequencies in recordings and in nature require the least Voltage to reproduce at an equal amplitude. Combined with the ten-fold increase in sensitivity of high-frequency loudspeaker drivers, Slew Rates above these values are of limited value.
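The usual rule for the minimum slew rate follows from differentiating a full-power sine wave: a peak voltage V_p at frequency f has a maximum rate of change of 2πf·V_p, and into a resistive load V_p = √(2PR). The sketch below applies that formula to the power levels in the text, assuming a purely resistive eight-Ohm load and no design margin, and also inverts it to find the full-power bandwidth of a given slew rate.

```python
import math

def required_slew_v_per_us(power_w: float, load_ohm: float, freq_hz: float) -> float:
    """Minimum slew rate (V/us) to reproduce a full-power sine: 2*pi*f*Vpeak."""
    v_peak = math.sqrt(2.0 * power_w * load_ohm)   # peak volts into a resistive load
    return 2.0 * math.pi * freq_hz * v_peak / 1e6

def full_power_bandwidth_hz(slew_v_per_us: float, v_peak: float) -> float:
    """Highest frequency a sine of v_peak can reach before slew limiting."""
    return slew_v_per_us * 1e6 / (2.0 * math.pi * v_peak)

for watts in (5, 200):
    print(f"{watts:3d} W into 8 ohms at 20 kHz: "
          f"{required_slew_v_per_us(watts, 8.0, 20e3):.2f} V/us")

v_peak_200w = math.sqrt(2 * 200 * 8)   # ~56.6 V peak
print(f"45 V/us at 200 W: full-power bandwidth ~"
      f"{full_power_bandwidth_hz(45.0, v_peak_200w) / 1e3:.0f} kHz")
```

With these assumptions the formula gives about 1.12 V/µs at 5 W and 7.11 V/µs at 200 W, and a 45 V/µs amplifier would sustain 200 W sine waves to roughly 127kHz.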

Dynamic Intermodulation Distortion

This classification has not been accepted as an officially recognized term by scientific and engineering bodies and appears only in audio literature. However, it is mentioned here because the name Dynamic Intermodulation Distortion is used as a quick, casual term for intermodulation distortion dynamic range. It can be useful in describing intermodulation artifacts in radio frequency amplifiers that vary in level while the input level remains constant. The proper term is intermodulation distortion dynamic range.

Intermodulation Distortion Dynamic Range

Intermodulation Distortion Dynamic Range, often abbreviated as I.M.D. D.R, pertains to radio frequency amplifiers. Like noise, intermodulation occurring at high frequencies may be subject to slight variances. Intermodulation Distortion Dynamic Range is a statistical means of determining the range of relative level over which the intermodulation artifacts may vary. It also helps the designer define a better intercept point, thereby improving the depth and accuracy of measurements.

Transitory Intermodulation Distortion

Transitory Intermodulation Distortion, abbreviated T.I.D, is a descriptive term for changes in the transient relationship that occur when two high-frequency, high-amplitude signals coincide within very narrow time spans. It differs from the other transient distortions in that it describes a case where more than one tone is present and their alignment is of prime importance. In this sense, the term Transitory applies to phenomena at both a waveform’s initial rise and its terminus. In the literal sense, it describes the intermodulation and production of short, new secondary emissions that can occur when an amplifier’s slew rate is impeded, causing a sufficiently high-frequency signal to become pinned to the slew trajectory because the amplifier cannot follow the desired rate of change. This distortion occurs only when two conditions combine: the frequency is increased, and the input signal’s inherent rise and fall times decrease to a duration shorter than the amplifier’s own linear square-wave response. Open-loop T.I.D may occur at radio frequencies and in circuits that operate into the high MHz and GHz, as a result of parasitic feedback capacitance or stability compensation.
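The way a signal becomes "pinned to the slew trajectory" can be sketched with a simple model in which the output may move toward the input by at most SR·Δt per time step. This idealized slew limiter is an assumption standing in for a real amplifier's input-stage current limit, and the 1 V/µs limit, 1 V amplitude, and test frequencies are hypothetical. Below the full-power bandwidth, SR/(2πV_p) ≈ 159kHz here, the output tracks the input; above it, the output can no longer reach the crests.

```python
import math

SR = 1.0e6        # hypothetical slew-rate limit: 1 V/us, expressed in V/s
FS = 100e6        # simulation rate, Hz (fine enough to resolve the slopes)

def slew_limited(x, slew_v_per_s: float, fs: float):
    """Output follows the input but changes by at most slew/fs per sample."""
    step_max = slew_v_per_s / fs
    y, out = 0.0, []
    for v in x:
        delta = max(-step_max, min(step_max, v - y))   # clamp the per-sample change
        y += delta
        out.append(y)
    return out

def worst_error(freq_hz: float, v_peak: float = 1.0, cycles: int = 5) -> float:
    """Largest input-output difference for a sine driven through the limiter."""
    n = int(cycles * FS / freq_hz)
    x = [v_peak * math.sin(2 * math.pi * freq_hz * i / FS) for i in range(n)]
    y = slew_limited(x, SR, FS)
    return max(abs(a - b) for a, b in zip(x, y))

for f in (50e3, 150e3, 300e3, 600e3):
    print(f"{f / 1e3:5.0f} kHz: worst tracking error = {worst_error(f):.3f} V")
```

The 50kHz and 150kHz tones pass through unaltered, while at 300kHz and 600kHz the output becomes a slew-limited, roughly triangular waveform that falls well short of the input crests.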

The origins of testing for Transitory Intermodulation Distortion date back to Roddam during the 1950’s. Testing for T.I.D benefits the engineering of radio communication equipment, which may be tasked with carrying multiple tones at once. It also aids in the reduction of gross errors in high-speed logic. To determine whether T.I.D poses a problem in a complete circuit, two or more function generators are used. Typical analysis combines two of several primary forms of stimulus: a symmetrical square waveform or string of square pulses, together with a sinusoidal waveform, a saw-toothed waveform, or a beat at a lower amplitude and much higher frequency than the square waveform. The higher frequency must have the same beginning and end points on each swing of the square signal. The two frequencies may be close together or spread farther apart, and they are commonly mixed in a 4:1 to 6:1 ratio. The signals are then combined into a final complex input signal that will cause any inherent problems to surface.
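A stimulus of the kind described can be generated in a few lines. The sketch assumes a 2.5kHz square wave summed with a 15kHz sine at a 4:1 peak-amplitude ratio; these frequencies, the sample rate, and the reading of the ratio as peak amplitude are all hypothetical choices. The frequencies were picked so that the sine completes exactly three cycles in each half-period of the square wave and therefore begins and ends at the same points on every swing.

```python
import math

FS = 960_000          # sample rate, Hz (assumed)
F_SQUARE = 2_500.0    # square-wave frequency, Hz (hypothetical)
F_SINE = 15_000.0     # high-frequency sine, Hz (hypothetical)
RATIO = 4.0           # square : sine peak-amplitude ratio (assumed reading of "4:1")
V_SQUARE = 1.0
V_SINE = V_SQUARE / RATIO

# The sine must complete a whole number of cycles in each half-period of the
# square wave, so it starts and ends at the same point on every swing.
cycles_per_half = F_SINE / (2 * F_SQUARE)
if not cycles_per_half.is_integer():
    raise ValueError("sine would not realign with each square-wave transition")

def square(t: float) -> float:
    """+V for the first half of each period, -V for the second half."""
    return V_SQUARE if (t * F_SQUARE) % 1.0 < 0.5 else -V_SQUARE

def stimulus(n_samples: int):
    """Square wave plus the lower-amplitude, higher-frequency sine."""
    return [square(i / FS) + V_SINE * math.sin(2 * math.pi * F_SINE * i / FS)
            for i in range(n_samples)]

samples = stimulus(4 * int(FS / F_SQUARE))       # four square-wave periods
edges = [k / (2 * F_SQUARE) for k in range(8)]   # square-wave transition times
edge_error = max(abs(math.sin(2 * math.pi * F_SINE * t)) for t in edges)

print("sine cycles per half-period:", cycles_per_half)
print("largest sine value at a transition:", edge_error)
print("peak of combined stimulus:", round(max(samples), 3))
```

The sine sits at zero at every square-wave transition, so any misalignment observed at a device's output after such a stimulus points to the device rather than to the test signal.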

In practical high-power, high-frequency applications, Transitory Intermodulation Distortion is characterized by a failure to reproduce the sharp slopes of a square wave that simultaneously serves as a carrier for a second, higher frequency. The longer time the square wave takes to rise and fall results in misalignment of the upper-frequency tones carried upon the fast-rising, lower-frequency fundamental square wave. Portions of these tones then fall onto the rising and receding walls of these slopes, and the modification that occurs at each transition leads to the growth of new intermodulation products spaced at wide intervals. When present, these products are most appropriately described as slew-rate induced artifacts.

Transitory Intermodulation Distortion cannot exist in the absence of slew-rate induced distortion (S.I.D), because it is an impeded slew rate that modifies the rising and receding walls of an otherwise mathematically perfect square waveform. In audio components like amplifiers, the most common stability compensation scheme introduces a dominant pole at a frequency that reduces the loop gain as the frequency rises. This compensation attenuates out-of-band frequencies that could otherwise cause the amplifier to self-oscillate. The side effect of this gain in stability is a limit imposed upon slew rate.
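For the common two-stage topology in which a Miller compensation capacitor C_c sets the dominant pole, a widely used first-order estimate is that the output can slew no faster than the input stage's tail current I can charge C_c, so SR ≈ I/C_c. The topology, the 2mA tail current, and the capacitor values below are hypothetical assumptions, and second-order effects are ignored.

```python
def slew_rate_v_per_us(i_tail_a: float, c_comp_f: float) -> float:
    """First-order slew limit of a Miller-compensated two-stage amplifier:
    the input stage can deliver at most its tail current to charge C_c,
    so the output dV/dt is limited to I_tail / C_c (returned in V/us)."""
    return i_tail_a / c_comp_f / 1e6

I_TAIL = 2e-3   # hypothetical input-stage tail current, A

for c_comp in (22e-12, 47e-12, 100e-12, 220e-12):
    print(f"Cc = {c_comp * 1e12:3.0f} pF -> SR = "
          f"{slew_rate_v_per_us(I_TAIL, c_comp):5.1f} V/us")
```

In this model, enlarging C_c to move the dominant pole lower for more stability margin reduces the slew rate in direct proportion unless the tail current is raised to match, which is the trade-off described above.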